This is part 7 of the VCP6-DCV Delta Study Guide. It covers network enhancements in vSphere 6.0, including Network I/O Control Version 3 and Multiple TCP/IP Stacks. After this section you should be able to understand and configure networks in vSphere 6.0.

- Network I/O Control Version 3

- Upgrade Network I/O Control to Version 3

- Bandwidth Allocation for System Traffic

- Bandwidth Allocation for Virtual Machine Traffic

- IPv6 in vSphere 6

- Multiple TCP/IP Stacks

Network I/O Control Version 3

vSphere Network I/O Control (NIOC) lets you allocate network bandwidth to business-critical applications and resolve situations where several types of traffic compete for common resources. In vSphere 6.0, Network I/O Control Version 3 lets you reserve bandwidth for a virtual NIC in a virtual machine or for an entire distributed port group. This ensures that virtual machines or tenants in a multitenancy environment do not impact the SLA of other virtual machines or tenants sharing the same upstream links.

Bandwidth can be guaranteed at the virtual NIC level or on the distributed port group, which enables different use cases:

Virtual NIC level

- Enables bandwidth to be guaranteed at the virtual network interface of a virtual machine

- Reservation is set on the vNIC in the virtual machine properties

- Allows vSphere administrators to guarantee bandwidth to mission-critical virtual machines

Distributed Port Group

- Enables bandwidth to be guaranteed to a specific VMware Distributed Switch port group

- Reservation is set on the VDS port group

- Enables multi-tenancy on one VDS by guaranteeing that bandwidth usage from one tenant won't impact another

Enable Network I/O Control on a vSphere Distributed Switch

To activate NIOC, right-click the Distributed Switch and select Edit Settings. From the Network I/O Control drop-down menu, select Enable.

Upgrade Network I/O Control to Version 3

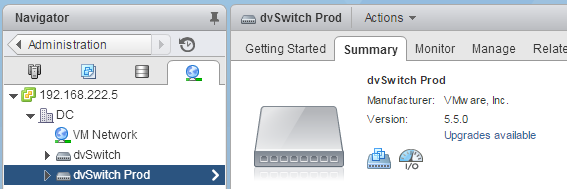

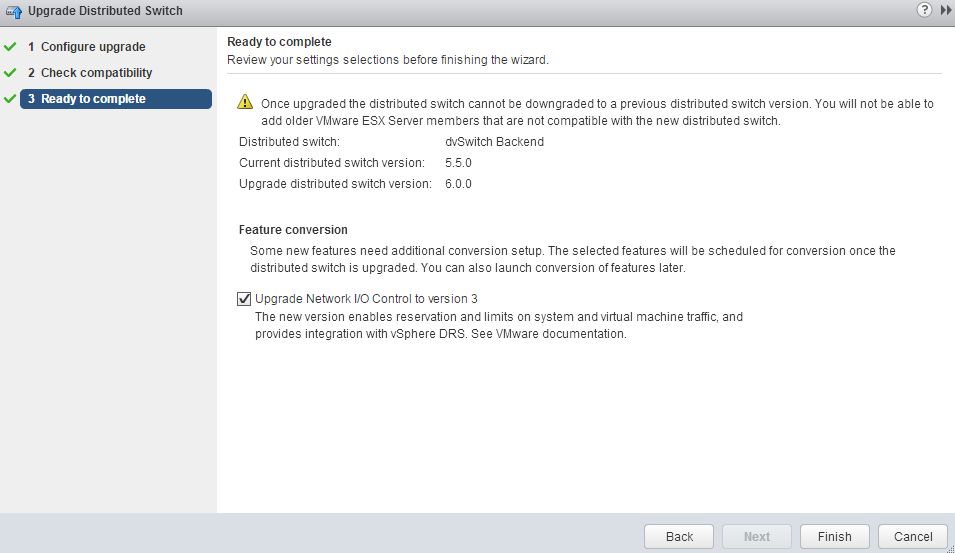

NIOC Version 3 is only supported on Distributed Switch Version 6.0.0. If you have upgraded the platform from vSphere 5.x, you have to upgrade the Distributed Switch to Version 6.0.0. You can verify the current version in the Summary tab of the Switch:

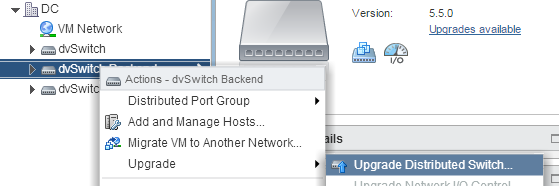

To start the upgrade, right-click the Switch and select Upgrade > Upgrade Distributed Switch.

Upgrading NIOC to version 3 is disruptive. Certain functionality is available only in NIOC version 2 and is removed during the upgrade to version 3:

- User-defined network resource pools, including all associations between them and existing distributed port groups

You can preserve certain resource allocation settings by transferring the shares from the user-defined network resource pools to shares for individual network adapters.

- Existing associations between ports and user-defined network resource pools

In Network I/O Control version 3, you cannot associate an individual distributed port with a network resource pool that is different from the pool assigned to the parent port group.

- CoS tagging of the traffic that is associated with a network resource pool

Network I/O Control version 3 does not support marking traffic that has higher QoS demands with CoS tags. After the upgrade, to restore CoS tagging of traffic that was associated with a user-defined network resource pool, use the traffic filtering and marking networking policy.

If you do not use any of the functions above, NIOC is automatically upgraded to version 3 during the upgrade to Distributed Switch 6.0.0. If the upgrade detects deprecated features, you have to select the feature conversion during the upgrade:

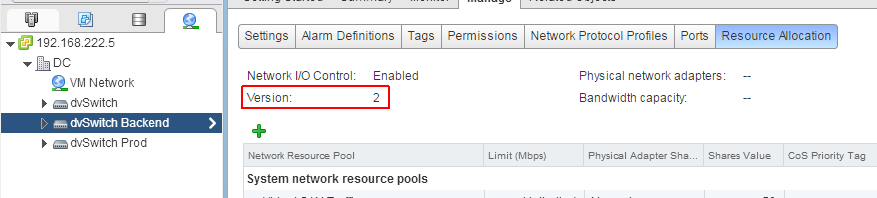

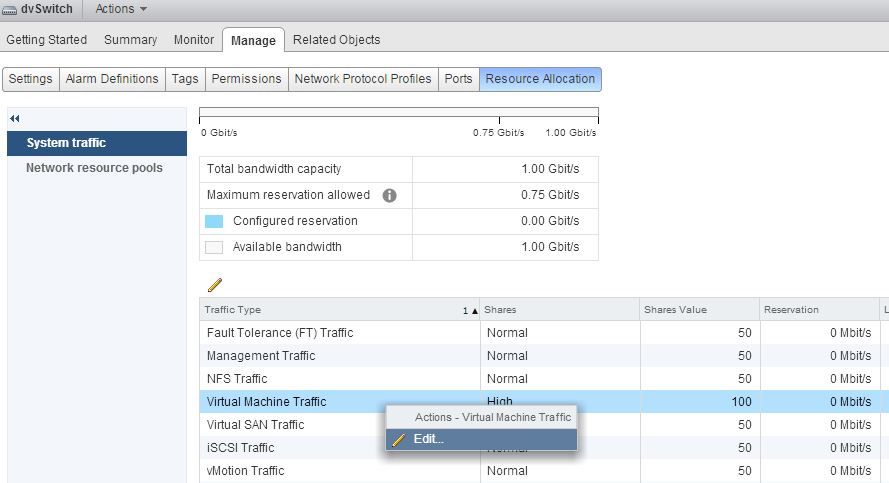

If you have a vSphere Distributed Switch 6.0.0 without Network I/O Control version 3, you can upgrade Network I/O Control later. To identify the version, navigate to Manage > Resource Allocation

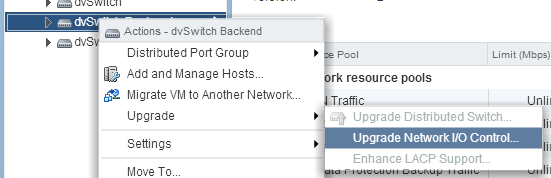

To upgrade, right-click the Switch and select Upgrade > Upgrade Network I/O Control

The upgrade process validates prerequisites and displays a warning for features that are about to be removed.

Bandwidth Allocation for System Traffic

You can configure Network I/O Control to allocate a certain amount of bandwidth for the following traffic types:

- Management Traffic

- Fault Tolerance (FT) Traffic

- iSCSI Traffic

- NFS Traffic

- Virtual SAN Traffic

- vMotion Traffic

- vSphere Replication (VR) Traffic

- vSphere Data Protection Backup Traffic

- Virtual machine Traffic

Bandwidth for each traffic type is allocated by using shares, reservations, and limits:

- Shares: Shares, from 1 to 100, reflect the relative priority of a system traffic type against the other system traffic types that are active on the same physical adapter. The amount of bandwidth available to a system traffic type is determined by its relative shares and by the amount of data that the other system features are transmitting.

- Reservation: The minimum bandwidth, in Mbps, that must be guaranteed on a single physical adapter. The total bandwidth reserved among all system traffic types cannot exceed 75 percent of the bandwidth that the physical network adapter with the lowest capacity can provide.

- Limit: The maximum bandwidth, in Mbps or Gbps, that a system traffic type can consume on a single physical adapter.
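The effect of shares can be illustrated with a little arithmetic. The following is a minimal sketch, not VMware's scheduler: it assumes that only the listed traffic types are active on one adapter, that all of them are saturating the link, and it ignores reservations and limits. The function name and the share values are hypothetical.

```python
def split_by_shares(capacity_mbps, shares):
    """Divide adapter capacity among active traffic types in proportion to their shares."""
    for name, value in shares.items():
        # Shares range from 1 to 100, as described in the list above.
        if not 1 <= value <= 100:
            raise ValueError(f"shares for {name} must be between 1 and 100")
    total_shares = sum(shares.values())
    return {name: capacity_mbps * value / total_shares
            for name, value in shares.items()}


# Hypothetical share values for three traffic types that are active at the same time.
active = {"management": 50, "vmotion": 50, "virtual_machine": 100}
allocation = split_by_shares(10_000, active)  # one 10 GbE adapter, in Mbps

for name, mbps in allocation.items():
    print(f"{name}: {mbps:.0f} Mbps")
```

If a traffic type stops transmitting, it drops out of the calculation and the remaining types divide the capacity among themselves, which is why shares only express relative priority.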

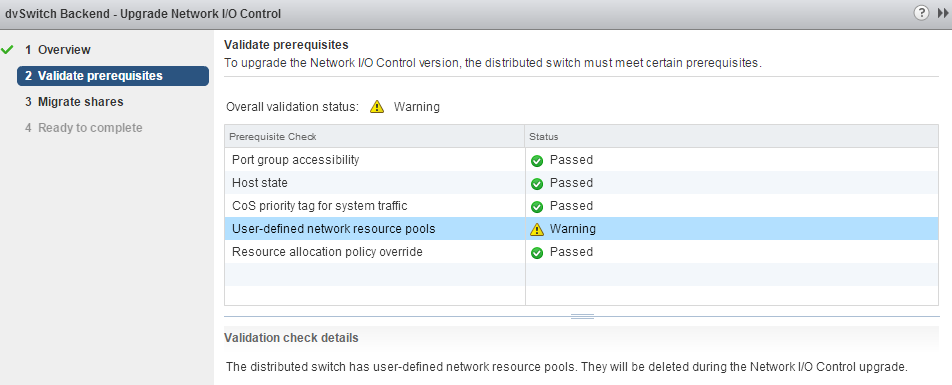

Bandwidth Reservation Example

The capacity of the physical adapters determines the bandwidth that you guarantee. According to this capacity, you can guarantee minimum bandwidth to a system feature for its optimal operation.

For example, on a distributed switch that is connected to ESXi hosts with 10 GbE network adapters, you might configure reservation to guarantee 1 Gbps for management through vCenter Server, 1 Gbps for iSCSI storage, 1 Gbps for vSphere Fault Tolerance, 1 Gbps for vSphere vMotion traffic, and 0.5 Gbps for virtual machine traffic. Network I/O Control allocates the requested bandwidth on each physical network adapter. You can't reserve more than 75 percent of the bandwidth of a physical network adapter (7.5 Gbps).
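The numbers in this example can be checked with a short calculation. This is only a sketch of the 75 percent rule described above, with hypothetical function and variable names; the actual validation is performed by vCenter Server.

```python
MAX_RESERVABLE_FRACTION = 0.75  # cap on reservations, as stated in the text


def check_system_reservations(adapter_capacities_gbps, reservations_gbps):
    """Return (total reserved, reservable cap, whether the reservations fit)."""
    # The cap is derived from the physical adapter with the lowest capacity.
    cap = min(adapter_capacities_gbps) * MAX_RESERVABLE_FRACTION
    total = sum(reservations_gbps.values())
    return total, cap, total <= cap


# Reservations from the example above, in Gbps, on 10 GbE adapters.
reservations = {
    "management": 1.0,
    "iscsi": 1.0,
    "fault_tolerance": 1.0,
    "vmotion": 1.0,
    "virtual_machine": 0.5,
}
total, cap, fits = check_system_reservations([10, 10], reservations)
print(f"{total} of {cap} Gbps reserved, fits: {fits}")
```

The example reserves 4.5 Gbps in total, which leaves 3 Gbps of reservable capacity below the 7.5 Gbps cap.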

Configure Bandwidth Reservation

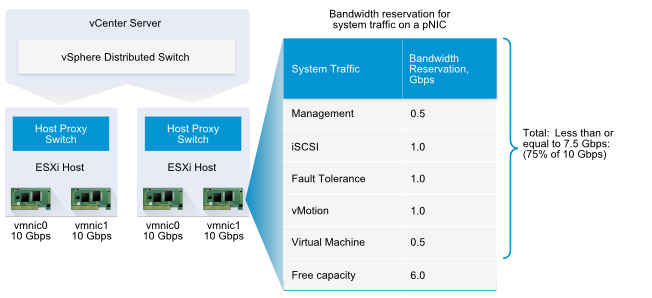

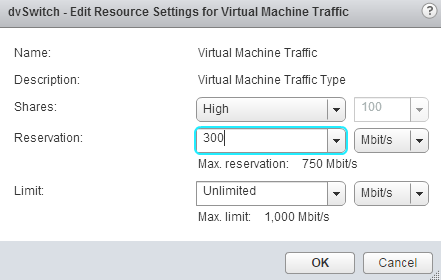

To assign bandwidth to specific traffic types, navigate to Manage > Resource Allocation > System traffic on the Distributed Switch where you want to configure the reservation. In this example, we set a reservation of 300 Mbit/s for Virtual SAN Traffic and 300 Mbit/s for Virtual Machine Traffic. Right-click Virtual Machine Traffic and press Edit...

Assign a Reservation of 300 Mbit/s and press OK.

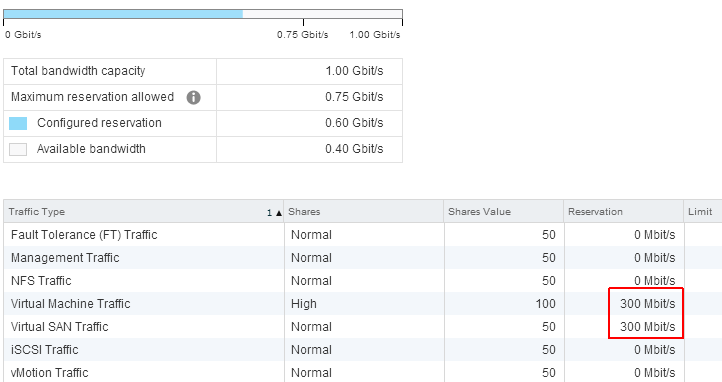

Repeat the steps for Virtual SAN Traffic and verify the configuration in the Resource Allocation section. You should see a reservation of 300 Mbit/s for both traffic types. You can also see the total configured reservation and remaining capacity:

You have successfully configured a bandwidth reservation. vCenter Server propagates the allocation from the distributed switch to the host physical adapters that are connected to the switch.

Bandwidth Allocation for Virtual Machine Traffic

Version 3 of Network I/O Control lets you configure bandwidth requirements for individual virtual machines. You can also use network resource pools where you can assign a bandwidth quota from the aggregated reservation for the virtual machine traffic and then allocate bandwidth from the pool to individual virtual machines.

Network I/O Control allocates bandwidth for virtual machines by using two models: allocation across the entire vSphere Distributed Switch based on network resource pools and allocation on the physical adapter that carries the traffic of a virtual machine.

Network Resource Pools

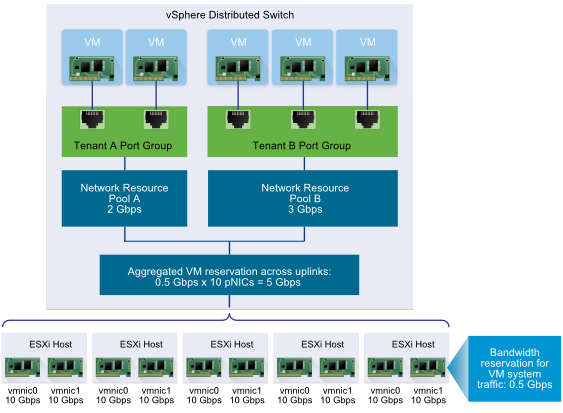

A network resource pool represents a part of the aggregated bandwidth that is reserved for the virtual machine system traffic on all physical adapters connected to the distributed switch. For example, if the virtual machine system traffic has 0.5 Gbps reserved on each 10 GbE uplink on a distributed switch that has 10 uplinks, then the total aggregated bandwidth available for VM reservation on this switch is 5 Gbps. Each network resource pool can reserve a quota of this 5 Gbps capacity. By default, distributed port groups on the switch are assigned to a network resource pool, called default, whose quota is not configured.
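The aggregation in this example is a simple multiplication, sketched below. The pool names and quotas are hypothetical and only illustrate how quotas draw from the aggregated reservation.

```python
def aggregated_vm_reservation(per_uplink_gbps, uplink_count):
    """Bandwidth available for VM reservations across all uplinks of the switch."""
    return per_uplink_gbps * uplink_count


def remaining_quota(aggregate_gbps, pool_quotas_gbps):
    """Capacity that is still unassigned after the quotas of all pools are subtracted."""
    assigned = sum(pool_quotas_gbps.values())
    if assigned > aggregate_gbps:
        raise ValueError("pool quotas exceed the aggregated VM reservation")
    return aggregate_gbps - assigned


# Values from the example above: 0.5 Gbps reserved on each of 10 uplinks.
aggregate = aggregated_vm_reservation(0.5, 10)

# Hypothetical pools that each reserve a quota of the aggregate.
pools = {"tenant-a": 2.0, "tenant-b": 1.5}
unassigned = remaining_quota(aggregate, pools)
print(f"aggregate: {aggregate} Gbps, unassigned: {unassigned} Gbps")
```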

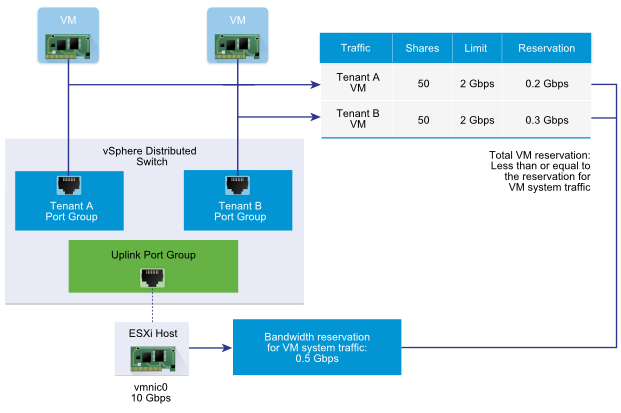

Bandwidth Provisioning to a Virtual Machine on the Host

To guarantee bandwidth, Network I/O Control implements a traffic placement engine that becomes active if a virtual machine has bandwidth reservation configured. The distributed switch attempts to place the traffic from a VM network adapter to the physical adapter that can supply the required bandwidth and is in the scope of the active teaming policy. The total bandwidth reservation of the virtual machines on a host cannot exceed the reserved bandwidth that is configured for the virtual machine system traffic.
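The idea of placing vNIC traffic on an adapter that can supply the reserved bandwidth can be pictured with a simple first-fit loop. This is an illustrative assumption, not the algorithm VMware uses: it ignores the teaming policy, and the adapter names, vNIC names, and values are hypothetical.

```python
def place_vnics(uplink_vm_reservation_mbps, vnic_reservations_mbps):
    """Assign each vNIC to the first uplink that still has enough reserved VM bandwidth."""
    free = dict(uplink_vm_reservation_mbps)  # copy so the input is not modified
    placement = {}
    for vnic, needed in vnic_reservations_mbps.items():
        for uplink, available in free.items():
            if available >= needed:
                free[uplink] = available - needed
                placement[vnic] = uplink
                break
        else:
            # No uplink can supply the reservation for this vNIC.
            raise ValueError(f"cannot satisfy reservation of {needed} Mbps for {vnic}")
    return placement


# Two uplinks, each with 300 Mbps reserved for virtual machine traffic.
uplinks = {"vmnic0": 300, "vmnic1": 300}
vnics = {"web": 200, "db": 200, "app": 100}
placement = place_vnics(uplinks, vnics)
print(placement)
```

In this sketch a further vNIC that asks for 250 Mbps would be rejected, because neither adapter has that much reserved bandwidth left, even though 200 Mbps remains in total across both adapters.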

Create a Network Resource Pool

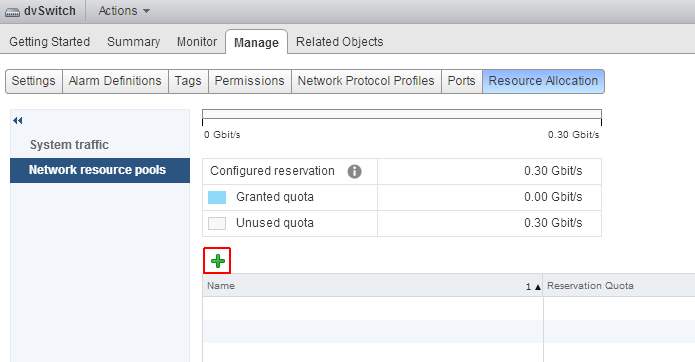

If you want to reserve bandwidth for a set of virtual machines, you have to create a network resource pool. Note that you must configure a reservation for Virtual Machine Traffic in the System traffic section before you can create a network resource pool. To create a network resource pool, navigate to Manage > Resource Allocation > Network resource pools on the Distributed Switch and click the Add icon:

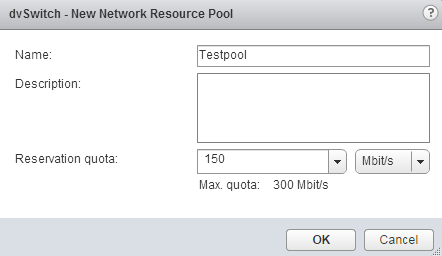

Configure a Reservation quota of 150 Mbit/s and press OK:

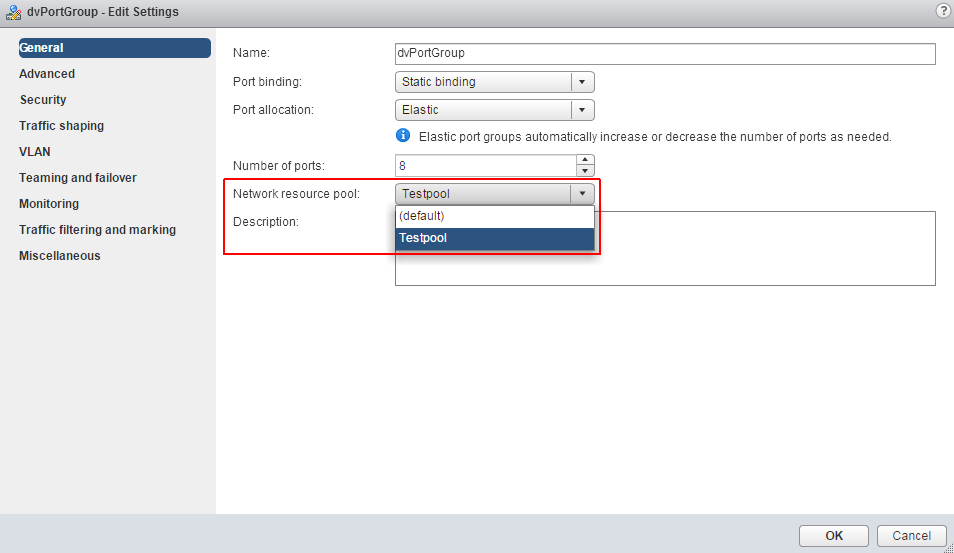

To assign the network resource pool to a port group, right-click the port group, select Edit Settings..., and choose the resource pool from the drop-down menu.

You can verify the assignment in the Distributed Switch within Manage > Resource Allocation > Network resource pools at the bottom of the page:

Configure a Network Bandwidth Reservation for a Virtual Machine

To configure a bandwidth reservation, navigate to the Manage tab of the virtual machine, select Settings > VM Hardware and press Edit.... Expand the Network adapter section and configure a reservation of 100 Mbit/s. If you provision bandwidth by using a network resource pool, the total reservation of the network adapters of powered-on VMs that are associated with the pool must not exceed the quota of the pool.

Network I/O Control allocates the bandwidth that you reserved for the network adapter of the virtual machine out of the reservation quota of the network resource pool.
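The quota rule can be expressed as a small admission check. This is a sketch under the assumption that only powered-on VMs count against the quota, as stated above. The function name and the existing adapters are hypothetical, while the 150 Mbit/s quota and the 100 Mbit/s reservation are the values used in this walkthrough.

```python
def pool_can_admit(quota_mbps, existing_vnics, new_reservation_mbps):
    """Check whether a new vNIC reservation fits into the quota of the pool.

    existing_vnics is a list of (reservation_mbps, powered_on) tuples.
    """
    # Only the adapters of powered-on VMs consume quota.
    used = sum(reservation for reservation, powered_on in existing_vnics if powered_on)
    return used + new_reservation_mbps <= quota_mbps


# Hypothetical pool state: one powered-on VM with 40 Mbit/s, one powered-off VM with 100 Mbit/s.
existing = [(40, True), (100, False)]

fits = pool_can_admit(150, existing, 100)
print(f"100 Mbit/s reservation fits into the 150 Mbit/s pool: {fits}")
```

If the powered-off VM in this sketch were powered on, the pool would already hold 140 Mbit/s of reservations and the new 100 Mbit/s reservation would no longer fit.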

IPv6 in vSphere 6

In an environment that is based on vSphere 6.0 and later, nodes and features can communicate over IPv6 transparently, with support for both static and automatic address configuration.

Supported IPv6 communication

- ESXi to ESXi

- vCenter Server to ESXi

- vCenter Server to vSphere Web Client

- ESXi to vSphere Client

- Virtual machine to virtual machine

- ESXi to iSCSI Storage

- ESXi to NFS Storage

The following vSphere features do not support IPv6:

- IPv6 addresses for ESXi hosts and vCenter Server that are not mapped to fully qualified domain names (FQDNs) on the DNS server. Workaround: Use FQDNs or make sure the IPv6 addresses are mapped to FQDNs on the DNS servers for reverse name lookup.

- Virtual volumes

- PXE booting as a part of Auto Deploy and Host Profiles. Workaround: PXE boot an ESXi host over IPv4 and configure the host for IPv6 by using Host Profiles.

- Connection of ESXi hosts and the vCenter Server Appliance to Active Directory. Workaround: Use Active Directory over LDAP as an identity source in vCenter Single Sign-On.

- NFS 4.1 storage with Kerberos. Workaround: Use NFS 4.1 with AUTH_SYS.

- Authentication Proxy

- Connection of the vSphere Management Assistant and vSphere Command-Line Interface to Active Directory. Workaround: Connect to Active Directory over LDAP.

- Use of the vSphere Client to enable IPv6 on vSphere features. Workaround: Use the vSphere Web Client to enable IPv6 for vSphere features.

Multiple TCP/IP Stacks

vSphere 6.0 introduces multiple TCP/IP stacks, which can be assigned to separate vSphere services. Each stack operates with its own:

- Memory heap

- ARP table

- Routing table

- Default gateway

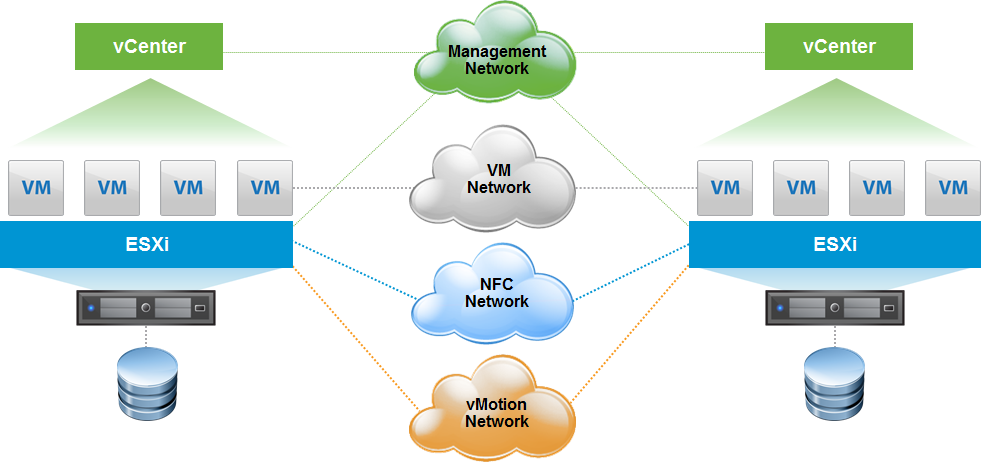

This enables finer control of network resource usage. Previous ESXi versions had only one networking stack. With separate TCP/IP stacks, operations such as vSphere vMotion can operate over a layer 3 boundary, and NFC operations such as cloning can be sent over a dedicated network rather than sharing the management network.

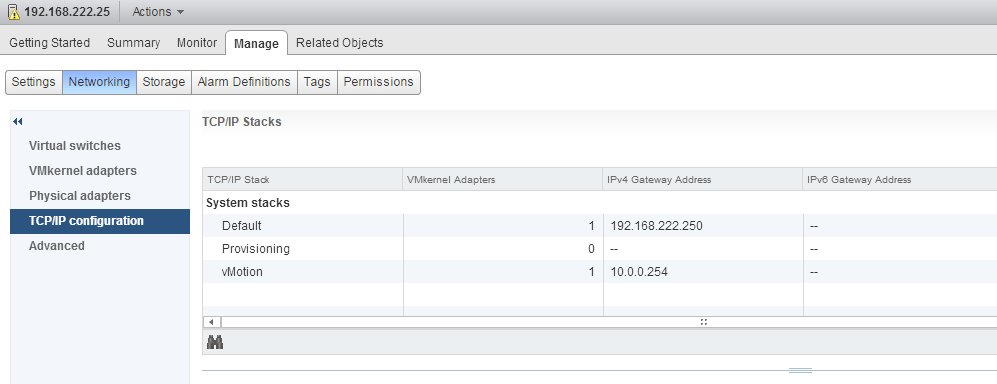

To view and change the configuration of a TCP/IP stack, navigate to ESXi > Manage > Networking > TCP/IP configuration. To edit a stack, select it from the menu and click Edit...

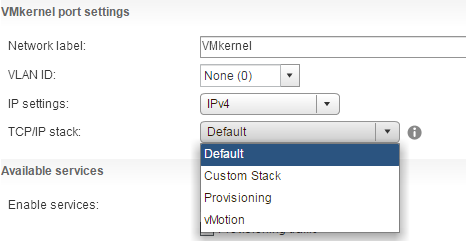

There are three stacks preconfigured on each host: Default, Provisioning and vMotion. You can also create your own custom TCP/IP stack. Open an SSH connection to the host and run the following command:

esxcli network ip netstack add -N "Custom Stack"

The custom TCP/IP stack is created on the host. You can now assign VMkernel adapters to the stack and configure it in the TCP/IP configuration section.
