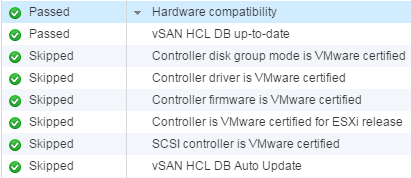

A new feature in vSAN 6.6 is the ability to silence health checks. Previous versions already let you disable the alerts triggered by health checks. Silencing goes one step further and lets you keep the vSAN health overview clean. Silenced checks are displayed with a green checkmark and marked as "Skipped".

This is especially useful in home labs, which often run on unsupported hardware.

At the time of writing, health checks cannot be silenced from the vSphere Web Client, and the feature is not described in the documentation. It is available through the Ruby vSphere Console (RVC) and the vSAN Management API. This article focuses on RVC. If you are unfamiliar with RVC, see this article.

Add a check to the silent list:

```
vsan.health.silent_health_check_configure -a <Check ID> <CLUSTER>
```

Remove a check from the silent list:

```
vsan.health.silent_health_check_configure -r <Check ID> <CLUSTER>
```

<CLUSTER> is the RVC path to the vSAN cluster.
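Silencing several checks means running the command once per Check ID. The following Python sketch only builds the command lines for a list of IDs so they can be pasted into the RVC prompt. It does not connect to vCenter. The helper name `silent_check_commands` is hypothetical, and the sketch assumes one Check ID per command, as in the examples in this article. The cluster argument is whatever RVC target you would type by hand, such as a full path, a mark like ~vsan66, or "." for the current directory.

```python
# Sketch: build RVC command lines for vsan.health.silent_health_check_configure.
# Assumption: one Check ID per command, as in this article's examples.
# silent_check_commands is a hypothetical helper, not part of RVC.
import re

# All Check IDs in the table below consist of lowercase letters and digits.
_CHECK_ID = re.compile(r"[a-z0-9]+")


def silent_check_commands(check_ids, cluster, remove=False):
    """Return one RVC command line per Check ID.

    check_ids -- iterable of Check IDs, e.g. "controllerdriver"
    cluster   -- RVC target of the vSAN cluster, e.g. "~vsan66" or "."
    remove    -- False adds checks to the silent list (-a),
                 True removes them from it (-r)
    """
    flag = "-r" if remove else "-a"
    commands = []
    for check_id in check_ids:
        # Reject typos such as spaces or uppercase letters before pasting.
        if not _CHECK_ID.fullmatch(check_id):
            raise ValueError(f"unexpected Check ID: {check_id!r}")
        commands.append(
            f"vsan.health.silent_health_check_configure {flag} {check_id} {cluster}"
        )
    return commands


if __name__ == "__main__":
    hcl_checks = [
        "controllerdiskmode",
        "controllerdriver",
        "controllerfirmware",
        "controllerreleasesupport",
        "controlleronhcl",
    ]
    # Print the commands so they can be pasted into the RVC prompt.
    for line in silent_check_commands(hcl_checks, "~vsan66"):
        print(line)
```

Passing `remove=True` produces the matching `-r` commands, which is handy for undoing a batch later.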

The following Check IDs are available:

| Description | Check ID |
| --- | --- |
| **Cloud Health** | |
| Controller utility is installed on host | vendortoolpresence |
| Controller with pass-through and RAID disks | mixedmode |
| Customer experience improvement program (CEIP) | vsancloudhealthceipexception |
| Disks usage on storage controller | diskusage |
| Online health connectivity | vsancloudhealthconnectionexception |
| vSAN and VMFS datastores on a Dell H730 controller with the lsi_mr3 driver | mixedmodeh730 |
| vSAN configuration for LSI-3108 based controller | h730 |
| vSAN max component size | smalldiskstest |
| **Cluster** | |
| Advanced vSAN configuration in sync | advcfgsync |
| Deduplication and compression configuration consistency | physdiskdedupconfig |
| Deduplication and compression usage health | physdiskdedupusage |
| Disk format version | upgradelowerhosts |
| ESXi vSAN Health service installation | healtheaminstall |
| Resync operations throttling | resynclimit |
| Software version compatibility | upgradesoftware |
| Time is synchronized across hosts and VC | timedrift |
| vSAN CLOMD liveness | clomdliveness |
| vSAN Disk Balance | diskbalance |
| vSAN Health Service up-to-date | healthversion |
| vSAN cluster configuration consistency | consistentconfig |
| vSphere cluster members match vSAN cluster members | clustermembership |
| **Data** | |
| vSAN VM health | vmhealth |
| vSAN object health | objecthealth |
| **Encryption** | |
| CPU AES-NI is enabled on hosts | hostcpuaesni |
| vCenter and all hosts are connected to Key Management Servers | kmsconnection |
| **Hardware compatibility** | |
| Controller disk group mode is VMware certified | controllerdiskmode |
| Controller driver is VMware certified | controllerdriver |
| Controller firmware is VMware certified | controllerfirmware |
| Controller is VMware certified for ESXi release | controllerreleasesupport |
| Host issues retrieving hardware info | hclhostbadstate |
| SCSI controller is VMware certified | controlleronhcl |
| vSAN HCL DB Auto Update | autohclupdate |
| vSAN HCL DB up-to-date | hcldbuptodate |
| **Limits** | |
| After 1 additional host failure | limit1hf |
| Current cluster situation | limit0hf |
| Host component limit | nodecomponentlimit |
| **Network** | |
| Active multicast connectivity check | multicastdeepdive |
| All hosts have a vSAN vmknic configured | vsanvmknic |
| All hosts have matching multicast settings | multicastsettings |
| All hosts have matching subnets | matchingsubnet |
| Hosts disconnected from VC | hostdisconnected |
| Hosts with connectivity issues | hostconnectivity |
| Multicast assessment based on other checks | multicastsuspected |
| Network latency check | hostlatencycheck |
| vMotion: Basic (unicast) connectivity check | vmotionpingsmall |
| vMotion: MTU check (ping with large packet size) | vmotionpinglarge |
| vSAN cluster partition | clusterpartition |
| vSAN: Basic (unicast) connectivity check | smallping |
| vSAN: MTU check (ping with large packet size) | largeping |
| **Performance service** | |
| All hosts contributing stats | hostsmissing |
| Performance data collection | collection |
| Performance service status | perfsvcstatus |
| Stats DB object | statsdb |
| Stats DB object conflicts | renameddirs |
| Stats master election | masterexist |
| Verbose mode | verbosemode |
| **Physical disk** | |
| Component limit health | physdiskcomplimithealth |
| Component metadata health | componentmetadata |
| Congestion | physdiskcongestion |
| Disk capacity | physdiskcapacity |
| Memory pools (heaps) | lsomheap |
| Memory pools (slabs) | lsomslab |
| Metadata health | physdiskmetadata |
| Overall disks health | physdiskoverall |
| Physical disk health retrieval issues | physdiskhostissues |
| Software state health | physdisksoftware |
| **Stretched cluster** | |
| Invalid preferred fault domain on witness host | witnesspreferredfaultdomaininvalid |
| Invalid unicast agent | hostwithinvalidunicastagent |
| No disk claimed on witness host | witnesswithnodiskmapping |
| Preferred fault domain unset | witnesspreferredfaultdomainnotexist |
| Site latency health | siteconnectivity |
| Unexpected number of fault domains | clusterwithouttwodatafaultdomains |
| Unicast agent configuration inconsistent | clusterwithmultipleunicastagents |
| Unicast agent not configured | hostunicastagentunset |
| Unsupported host version | hostwithnostretchedclustersupport |
| Witness host fault domain misconfigured | witnessfaultdomaininvalid |
| Witness host not found | clusterwithoutonewitnesshost |
| Witness host within vCenter cluster | witnessinsidevccluster |
| **vSAN iSCSI target service** | |
| Home object | iscsihomeobjectstatustest |
| Network configuration | iscsiservicenetworktest |
| Service runtime status | iscsiservicerunningtest |

Example

Silence all HCL-related checks in a home lab that runs on unsupported hardware. This typically involves the following Check IDs:

- controllerdiskmode

- controllerdriver

- controllerfirmware

- controllerreleasesupport

- controlleronhcl

- Connect to the vCenter Server Appliance (vCSA) with SSH.

- Open RVC

```
# rvc administrator@vc.virten.lab
```

- Mark the vSAN cluster.

This step is optional, but it lets you use ~vsan66 as the target in the following commands:

```
> mark vsan66 vc.virten.lab/Datacenter/computers/vSAN66/
```

- Silence health checks

```
> vsan.health.silent_health_check_configure -a controllerdiskmode ~vsan66
Successfully add check "Controller disk group mode is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerdriver ~vsan66
Successfully add check "Controller driver is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerfirmware ~vsan66
Successfully add check "Controller firmware is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerreleasesupport ~vsan66
Successfully add check "Controller is VMware certified for ESXi release" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controlleronhcl ~vsan66
Successfully add check "SCSI controller is VMware certified" to silent health check list for vSAN66
```

- Verify the status with vsan.health.silent_health_check_status:

```
> vsan.health.silent_health_check_status ~vsan66
Silent Status of Cluster vSAN66:
+----------------------------------------------------+---------------------------+---------------+
| Health Check                                       | Health Check Id           | Silent Status |
+----------------------------------------------------+---------------------------+---------------+
| Hardware compatibility                             |                           |               |
|   Controller disk group mode is VMware certified   | controllerdiskmode        | Silent        |
|   Controller driver is VMware certified            | controllerdriver          | Silent        |
|   Controller firmware is VMware certified          | controllerfirmware        | Silent        |
|   Controller is VMware certified for ESXi release  | controllerreleasesupport  | Silent        |
|   Host issues retrieving hardware info             | hclhostbadstate           | Normal        |
|   SCSI controller is VMware certified              | controlleronhcl           | Silent        |
|   vSAN HCL DB Auto Update                          | autohclupdate             | Silent        |
|   vSAN HCL DB up-to-date                           | hcldbuptodate             | Normal        |
+----------------------------------------------------+---------------------------+---------------+
```
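When many checks are silenced, reading the status table by eye becomes error-prone. The following minimal Python sketch extracts each Check ID and its Silent Status from the table printed by vsan.health.silent_health_check_status. It assumes the three-column layout shown in this article, and the function name `parse_silent_status` is hypothetical. Category rows such as "Hardware compatibility" have no Check ID and are skipped.

```python
# Sketch: parse the table printed by vsan.health.silent_health_check_status.
# Assumption: the three-column layout shown in this article
# (Health Check | Health Check Id | Silent Status).
# parse_silent_status is a hypothetical helper, not part of RVC.

# Excerpt of the output above; column padding and borders are shortened.
SAMPLE_OUTPUT = """\
Silent Status of Cluster vSAN66:
+---+---+---+
| Health Check | Health Check Id | Silent Status |
+---+---+---+
| Hardware compatibility | | |
| Controller driver is VMware certified | controllerdriver | Silent |
| Controller firmware is VMware certified | controllerfirmware | Silent |
| Host issues retrieving hardware info | hclhostbadstate | Normal |
| vSAN HCL DB up-to-date | hcldbuptodate | Normal |
+---+---+---+
"""


def parse_silent_status(output):
    """Return a dict mapping Check ID -> status ("Silent" or "Normal")."""
    statuses = {}
    for line in output.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip the title line and the +---+ borders
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != 3:
            continue
        _, check_id, status = cells
        # Category rows have no Check ID; the header row holds the column name.
        if check_id and check_id != "Health Check Id":
            statuses[check_id] = status
    return statuses


if __name__ == "__main__":
    statuses = parse_silent_status(SAMPLE_OUTPUT)
    silenced = sorted(cid for cid, state in statuses.items() if state == "Silent")
    print("Silenced checks:", ", ".join(silenced))
```

The parser relies on column order rather than column widths, so differences in padding do not matter.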

To confirm that the changes took effect, select the cluster, go to Monitor > vSAN > Health and click Retest. The previously failed checks should now turn green :-)

I have tried the commands (vsan.health.silent....), but they are not available in RVC:

```
root@vcenter [ ~ ]# rvc administrator@n00b.local@localhost
Install the "ffi" gem for better tab completion.
password:
0 /
1 localhost/
> vsan.health.
vsan.health.cluster_attach_to_sr        vsan.health.cluster_repair_immediately
vsan.health.cluster_debug_multicast     vsan.health.cluster_status
vsan.health.cluster_load_test_cleanup   vsan.health.hcl_update_db
vsan.health.cluster_load_test_prepare   vsan.health.health_check_interval_configure
vsan.health.cluster_load_test_run       vsan.health.health_check_interval_status
vsan.health.cluster_proxy_configure     vsan.health.health_summary
vsan.health.cluster_proxy_status        vsan.health.multicast_speed_test
vsan.health.cluster_rebalance
> vsan.health.
```

Did I miss something?

Your commands were a bit off. They either need the vSAN cluster path appended at the end, or you have to drill down to the vSAN cluster level first and then run the commands with a "." to tell RVC to run them where you are.

More simply put, when you see "0 /" and "1 localhost/", run "cd 1", then "ls" and select the datacenter, run "ls" again and select "computers", then run "ls" once more. It should list the vSAN cluster, so cd into that.

At that point, use this format:

```
vsan.health.silent_health_check_configure -a diskbalance .
```

That silences the vSAN Disk Balance check in vSAN health. The period at the end, after the space, is intentional: it tells RVC that the vSAN cluster is where we already are (because we drilled down to it above).

Forget my last comment!

I forgot to upgrade today; too many problems :)

Sorry.