vSAN 6.6 RVC Guide

The "vSAN 6.6 RVC Guide" series explains how to manage your VMware Virtual SAN environment with the Ruby vSphere Console. RVC is an interactive command line tool to control and automate your platform. If you are new to RVC, make sure to read the Getting Started with Ruby vSphere Console Guide. All commands are from the latest vSAN 6.6 version.

The third part explains commands related to object management in vSAN. These commands are used for troubleshooting or reconfiguring objects. They also provide insight into how vSAN works.

- vsan.disks_info

- vsan.disks_stats

- vsan.cmmds_find

- vsan.vm_object_info

- vsan.disk_object_info

- vsan.object_info

- vsan.object_reconfigure

- vsan.vmdk_stats

To shorten commands, I've used marks for the vSAN-enabled cluster, a virtual machine and an ESXi host participating in the vSAN. This enables me to use ~cluster, ~vm and ~esx in examples:

/localhost/DC> mark cluster ~/computers/VSAN-Cluster/

/localhost/DC> mark vm ~/vms/vma.virten.lab

/localhost/DC> mark esx ~/computers/VSAN-Cluster/hosts/esx1.virten.lab/

Object Management

vsan.disks_info [-s] ~host

Print physical disk information from a host, including disk type (SSD or MD), size and state. This command helps you identify whether disks are eligible to be used for vSAN.

- -s, --show-adapters: Display adapter information in the state column.

Example 1 – Print disk information from a host:

/localhost/DC> vsan.disks_info vSAN65/hosts/vesx1.virten.lab/

Gathering disk information for host vesx1.virten.lab

Done gathering disk information

Disks on host vesx1.virten.lab:

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

| DisplayName | isSSD | Size | State |

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

| Local NVMe Disk (t10.NVMe_Samsung_SSD_950_PRO) | SSD | 256 GB | inUse |

| NVMe Samsung SSD 950 | | | vSAN Format Version: v5 |

| | | | |

| | | | Adapters: |

| | | | vmhba0 (nvme) |

| | | | Samsung Electronics Co Ltd NVMe SSD Controller |

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

| Local NVMe Disk (t10.NVMe_Samsung_SSD_950_PRO) | SSD | 512 GB | inUse |

| NVMe Samsung SSD 950 | | | vSAN Format Version: v5 |

| | | | |

| | | | Adapters: |

| | | | vmhba0 (nvme) |

| | | | Samsung Electronics Co Ltd NVMe SSD Controller |

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

| Local NVMe Disk (t10.NVMe_Samsung_SSD_950_PRO) | SSD | 512 GB | inUse |

| NVMe Samsung SSD 950 | | | vSAN Format Version: v5 |

| | | | |

| | | | Adapters: |

| | | | vmhba0 (nvme) |

| | | | Samsung Electronics Co Ltd NVMe SSD Controller |

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

| Local USB Direct-Access (mpx.vmhba32:C0:T0:L0) | SSD | 16 GB | ineligible (Existing partitions found on disk 'mpx.vmhba0:C0:T0:L0'.) |

| Kingston DataTraveler 2.0 | | | |

| | | | Partition table: |

| | | | 5: 0.24 GB, type = vfat |

| | | | 6: 0.24 GB, type = vfat |

| | | | 7: 0.11 GB, type = coredump |

| | | | 8: 0.28 GB, type = vfat |

| | | | 9: 2.50 GB, type = coredump |

| | | | |

| | | | Adapters: |

| | | | vmhba32 (usb-storage) |

| | | | USB |

+------------------------------------------------+-------+--------+-----------------------------------------------------------------------+

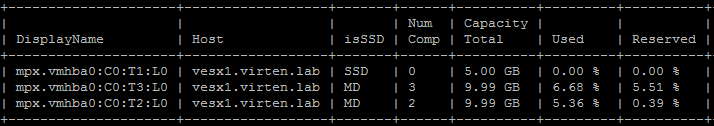

vsan.disks_stats ~cluster|~host

Display information about the disks used in a host or cluster including:

- Disk Type (SSD or MD)

- Number of components residing on the disk

- Disk capacity

- Percentage of used capacity

- Space reservations (via the ObjectSpaceReservation policy)

- Health

- On-disk format

Example 1 – Show all disks in a cluster:

/localhost/DC> vsan.disks_stats ~cluster

Fetching vSAN disk info from vesx3.virten.lab (may take a moment) ...

Fetching vSAN disk info from vesx2.virten.lab (may take a moment) ...

Fetching vSAN disk info from vesx1.virten.lab (may take a moment) ...

Done fetching vSAN disk infos

+---------------------+------------------+-------+------+----------+---------+----------+----------+----------+----------+----------+---------+----------+---------+

| | | | Num | Capacity | | | Physical | Physical | Physical | Logical | Logical | Logical | Status |

| DisplayName | Host | isSSD | Comp | Total | Used | Reserved | Capacity | Used | Reserved | Capacity | Used | Reserved | Health |

+---------------------+------------------+-------+------+----------+---------+----------+----------+----------+----------+----------+---------+----------+---------+

| mpx.vmhba0:C0:T1:L0 | vesx1.virten.lab | SSD | 0 | 5.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T3:L0 | vesx1.virten.lab | MD | 3 | 9.99 GB | 6.68 % | 5.51 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T2:L0 | vesx1.virten.lab | MD | 2 | 9.99 GB | 5.36 % | 0.39 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

+---------------------+------------------+-------+------+----------+---------+----------+----------+----------+----------+----------+---------+----------+---------+

| mpx.vmhba0:C0:T1:L0 | vesx2.virten.lab | SSD | 0 | 5.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T2:L0 | vesx2.virten.lab | MD | 2 | 9.99 GB | 32.06 % | 30.69 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T3:L0 | vesx2.virten.lab | MD | 3 | 9.99 GB | 10.16 % | 5.51 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

+---------------------+------------------+-------+------+----------+---------+----------+----------+----------+----------+----------+---------+----------+---------+

| mpx.vmhba0:C0:T1:L0 | vesx3.virten.lab | SSD | 0 | 5.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T2:L0 | vesx3.virten.lab | MD | 2 | 9.99 GB | 35.77 % | 30.69 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

| mpx.vmhba0:C0:T3:L0 | vesx3.virten.lab | MD | 3 | 9.99 GB | 5.16 % | 0.47 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |

+---------------------+------------------+-------+------+----------+---------+----------+----------+----------+----------+----------+---------+----------+---------+
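If you want to post-process this output in a script, the rows can be split on the pipe character. The following Python sketch is not part of RVC. The function names and the 30 % threshold are my own assumptions, and it expects the 14-column layout of the table above, fed with rows copied from the console.

```python
# Hypothetical helper, not part of RVC: parse rows copied from vsan.disks_stats.
SAMPLE = """\
| DisplayName | Host | isSSD | Comp | Total | Used | Reserved | Capacity | Used | Reserved | Capacity | Used | Reserved | Health |
| mpx.vmhba0:C0:T1:L0 | vesx2.virten.lab | SSD | 0 | 5.00 GB | 0.00 % | 0.00 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |
| mpx.vmhba0:C0:T2:L0 | vesx2.virten.lab | MD | 2 | 9.99 GB | 32.06 % | 30.69 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |
| mpx.vmhba0:C0:T3:L0 | vesx2.virten.lab | MD | 3 | 9.99 GB | 10.16 % | 5.51 % | N/A | N/A | N/A | N/A | N/A | N/A | OK (v5) |
"""


def parse_disks_stats(text):
    """Return one dict per data row; borders and header rows are skipped."""
    disks = []
    for line in text.splitlines():
        cells = [c.strip() for c in line.strip().strip("|").split("|")]
        # Data rows have 14 cells and a device name in the first cell.
        if len(cells) != 14 or not cells[0] or cells[0] == "DisplayName":
            continue
        disks.append({
            "disk": cells[0],
            "host": cells[1],
            "type": cells[2],
            "components": int(cells[3]),
            "used_pct": float(cells[5].rstrip(" %")),
            "reserved_pct": float(cells[6].rstrip(" %")),
            "health": cells[13],
        })
    return disks


def disks_over(disks, threshold_pct):
    """Capacity disks (MD) whose used capacity exceeds the threshold."""
    return [d for d in disks
            if d["type"] == "MD" and d["used_pct"] > threshold_pct]


disks = parse_disks_stats(SAMPLE)
for d in disks_over(disks, 30.0):
    print(f'{d["host"]} {d["disk"]}: {d["used_pct"]} % used')
```

With the three vesx2 rows from the example, only mpx.vmhba0:C0:T2:L0 is reported.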

vsan.cmmds_find [-t|-u|-o] ~cluster|~host

Display information about an object or component in vSAN when only its UUID is known. This is a powerful command to find objects and retrieve detailed object information. It can be run against hosts or clusters. Running it against a cluster is recommended because UUIDs are then resolved into readable names.

- -t, --type: CMMDS type, e.g. DOM_OBJECT, LSOM_OBJECT, POLICY, DISK etc.

- -u, --uuid: UUID of the entry.

- -o, --owner: UUID of the owning node.

Types:

- DISK – represents a magnetic disk or flash device

- DOM_OBJECT – represents composite objects

- POLICY – represents a policy

- LSOM_OBJECT – represents a component

Example 1 - List all Disks in VSAN:

/localhost/DC> vsan.cmmds_find ~cluster -t DISK

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| 1 | DISK | 520187e9-9a07-3d0c-04b0-dd5bb0f4df04 | vesx1.virten.lab | Healthy | {"capacity"=>1048576, |

| | | | | | "iops"=>20000, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>2000000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>1, |

| | | | | | "ssdUuid"=>"520187e9-9a07-3d0c-04b0-dd5bb0f4df04", |

| | | | | | "volumeName"=>"NA", |

| | | | | | "formatVersion"=>"5", |

| | | | | | "devName"=>"mpx.vmhba0:C0:T1:L0:2", |

| | | | | | "ssdCapacity"=>5365546496, |

| | | | | | "rdtMuxGroup"=>0, |

| | | | | | "isAllFlash"=>1, |

| | | | | | "maxComponents"=>0, |

| | | | | | "logicalCapacity"=>0, |

| | | | | | "physDiskCapacity"=>0, |

| | | | | | "dedupScope"=>0, |

| | | | | | "dedupMetadata"=>0, |

| | | | | | "isEncrypted"=>0} |

| 2 | DISK | 52678934-7d90-d712-61c6-6919990a18f3 | vesx1.virten.lab | Healthy | {"capacity"=>10729029632, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |

[...]
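The Content column is printed in Ruby hash notation ("key"=>value). For flat entries like the DISK type, it can be turned into a Python dictionary by replacing the arrows. This is only a sketch: parse_cmmds_content is a name I made up, the sample is a shortened copy of entry 1 joined into one line, and I assume that no string value contains "=>" itself. The ssdCapacity value appears to be the device size in bytes, as it matches the 5.00 GB reported by vsan.disks_stats.

```python
import json

# Shortened Content of DISK entry 1, joined into a single line.
CONTENT = (
    '{"capacity"=>1048576, "iops"=>20000, "isSsd"=>1, '
    '"ssdUuid"=>"520187e9-9a07-3d0c-04b0-dd5bb0f4df04", '
    '"formatVersion"=>"5", "devName"=>"mpx.vmhba0:C0:T1:L0:2", '
    '"ssdCapacity"=>5365546496, "isAllFlash"=>1}'
)


def parse_cmmds_content(text):
    """Convert Ruby hash notation to a dict (assumes no '=>' inside values)."""
    return json.loads(text.replace("=>", ":"))


disk = parse_cmmds_content(CONTENT)
kind = "flash device" if disk["isSsd"] == 1 else "magnetic disk"
size_gib = disk["ssdCapacity"] / 1024 ** 3
print(f'{disk["devName"]}: {kind}, {size_gib:.2f} GiB')
```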

Example 2 - List all disks from a specific ESXi host. Identify the host's UUID (Node UUID) with vsan.host_info:

/localhost/DC> vsan.host_info ~esx

Fetching host info from vesx1.virten.lab (may take a moment) ...

Product: VMware ESXi 6.5.0 build-5310538

vSAN enabled: yes

Cluster info:

Cluster role: agent

Cluster UUID: 520e0160-c109-abd2-45af-f5378f18f74a

Node UUID: 58a4a441-1c1a-4243-b64c-005056b968bd

Member UUIDs: ["58a4a3da-1284-ce11-70a5-005056b9f17c", "58a4a4f2-c6ed-db2a-0b14-005056b90377", "58a4a441-1c1a-4243-b64c-005056b968bd"] (3)

Node evacuated: no

Storage info:

Auto claim: yes

Disk Mappings:

SSD: Local VMware Disk (mpx.vmhba0:C0:T1:L0) - 5 GB, v5

MD: Local VMware Disk (mpx.vmhba0:C0:T3:L0) - 10 GB, v5

MD: Local VMware Disk (mpx.vmhba0:C0:T2:L0) - 10 GB, v5

FaultDomainInfo:

Hamburg

NetworkInfo:

Adapter: vmk2 (10.100.0.121)

/localhost/DC> vsan.cmmds_find ~cluster -t DISK -o 58a4a441-1c1a-4243-b64c-005056b968bd

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| 1 | DISK | 520187e9-9a07-3d0c-04b0-dd5bb0f4df04 | vesx1.virten.lab | Healthy | {"capacity"=>1048576, |

| | | | | | "iops"=>20000, |

| | | | | | "iopsWritePenalty"=>10000000, |

| | | | | | "throughput"=>200000000, |

| | | | | | "throughputWritePenalty"=>0, |

| | | | | | "latency"=>3400000, |

| | | | | | "latencyDeviation"=>0, |

| | | | | | "reliabilityBase"=>10, |

| | | | | | "reliabilityExponent"=>15, |

| | | | | | "mtbf"=>2000000, |

| | | | | | "l2CacheCapacity"=>0, |

| | | | | | "l1CacheCapacity"=>16777216, |

| | | | | | "isSsd"=>1, |

| | | | | | "ssdUuid"=>"520187e9-9a07-3d0c-04b0-dd5bb0f4df04", |

| | | | | | "volumeName"=>"NA", |

| | | | | | "formatVersion"=>"5", |

| | | | | | "devName"=>"mpx.vmhba0:C0:T1:L0:2", |

| | | | | | "ssdCapacity"=>5365546496, |

| | | | | | "rdtMuxGroup"=>0, |

| | | | | | "isAllFlash"=>1, |

| | | | | | "maxComponents"=>0, |

| | | | | | "logicalCapacity"=>0, |

| | | | | | "physDiskCapacity"=>0, |

| | | | | | "dedupScope"=>0, |

| | | | | | "dedupMetadata"=>0, |

| | | | | | "isEncrypted"=>0} |

| 2 | DISK | 52678934-7d90-d712-61c6-6919990a18f3 | vesx1.virten.lab | Healthy | {"capacity"=>10729029632, |

| | | | | | "iops"=>100, |

| | | | | | "iopsWritePenalty"=>10000000, |
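The two steps of this example (read the Node UUID, then pass it to -o) can be scripted. The sketch below works on text copied from vsan.host_info. node_uuid and cmmds_find_command are hypothetical helper names, and the result is just a string to paste into RVC.

```python
import re

# Excerpt of the vsan.host_info output shown above.
HOST_INFO = """\
Cluster role: agent
Cluster UUID: 520e0160-c109-abd2-45af-f5378f18f74a
Node UUID: 58a4a441-1c1a-4243-b64c-005056b968bd
"""

UUID = r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"


def node_uuid(host_info_text):
    """Return the Node UUID from vsan.host_info output."""
    match = re.search(r"^\s*Node UUID:\s*(" + UUID + r")\s*$",
                      host_info_text, re.MULTILINE)
    if match is None:
        raise ValueError("no Node UUID line found")
    return match.group(1)


def cmmds_find_command(cluster, cmmds_type, owner_uuid):
    """Build the RVC command line that filters CMMDS entries by owner."""
    return f"vsan.cmmds_find {cluster} -t {cmmds_type} -o {owner_uuid}"


command = cmmds_find_command("~cluster", "DISK", node_uuid(HOST_INFO))
print(command)
```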

Example 3 - List DOM Objects from a specific ESXi Host:

/localhost/DC> vsan.cmmds_find ~cluster -t DOM_OBJECT -o 58a4a4f2-c6ed-db2a-0b14-005056b90377

+---+------------+--------------------------------------+------------------+---------+---------------------------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------------+--------------------------------------+------------------+---------+---------------------------------------------------------------------+

| 1 | DOM_OBJECT | da00a658-f668-f186-af1c-005056b9f17c | vesx3.virten.lab | Healthy | {"type"=>"Configuration", |

| | | | | | "attributes"=> |

| | | | | | {"CSN"=>70, |

| | | | | | "SCSN"=>74, |

| | | | | | "addressSpace"=>273804165120, |

| | | | | | "scrubStartTime"=>1487274202730650, |

| | | | | | "objectVersion"=>5, |

| | | | | | "highestDiskVersion"=>5, |

| | | | | | "muxGroup"=>412332854759424, |

| | | | | | "groupUuid"=>"da00a658-f668-f186-af1c-005056b9f17c", |

| | | | | | "compositeUuid"=>"da00a658-f668-f186-af1c-005056b9f17c", |

| | | | | | "objClass"=>2}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"RAID_1", |

| | | | | | "attributes"=>{"scope"=>3}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"capacity"=>[0, 273804165120], |

| | | | | | "addressSpace"=>273804165120, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1496855824, |

| | | | | | "faultDomainId"=>"58a4a4f2-c6ed-db2a-0b14-005056b90377", |

| | | | | | "lastScrubbedOffset"=>314769408, |

| | | | | | "subFaultDomainId"=>"58a4a4f2-c6ed-db2a-0b14-005056b90377", |

| | | | | | "objClass"=>2}, |

| | | | | | "componentUuid"=>"da00a658-c6d2-6c87-8146-005056b9f17c", |

| | | | | | "diskUuid"=>"522a4211-57ee-f31f-3a84-18a75a0c0ff3"}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"capacity"=>[0, 273804165120], |

| | | | | | "addressSpace"=>273804165120, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1496855824, |

| | | | | | "faultDomainId"=>"35d7df6e-d3d9-3be2-927d-14acc5f1fc9a", |

| | | | | | "lastScrubbedOffset"=>314703872, |

| | | | | | "subFaultDomainId"=>"58a4a441-1c1a-4243-b64c-005056b968bd", |

| | | | | | "objClass"=>2}, |

| | | | | | "componentUuid"=>"0b4ef658-56ca-6b29-f5fa-005056b9f17c", |

| | | | | | "diskUuid"=>"52678934-7d90-d712-61c6-6919990a18f3"}}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Witness", |

| | | | | | "attributes"=> |

| | | | | | {"componentState"=>5, |

| | | | | | "componentStateTS"=>1496855762, |

| | | | | | "isWitness"=>1, |

| | | | | | "faultDomainId"=>"58a4a3da-1284-ce11-70a5-005056b9f17c", |

| | | | | | "subFaultDomainId"=>"58a4a3da-1284-ce11-70a5-005056b9f17c"}, |

| | | | | | "componentUuid"=>"0e4ef658-2c46-6ba4-c422-005056b9f17c", |

| | | | | | "diskUuid"=>"52e3ae7e-b744-796d-de9f-ff73b0cd4df9"}} |

| 2 | DOM_OBJECT | dc00a658-204b-db99-8337-005056b9f17c | vesx3.virten.lab | Healthy | {"type"=>"Configuration", |

| | | | | | "attributes"=> |

| | | | | | {"CSN"=>60, |

| | | | | | "SCSN"=>63, |

| | | | | | "addressSpace"=>6442450944, |

| | | | | | "scrubStartTime"=>1497097171666092, |

| | | | | | "objectVersion"=>5, |

| | | | | | "highestDiskVersion"=>5, |

| | | | | | "muxGroup"=>412332854759424, |

| | | | | | "groupUuid"=>"da00a658-f668-f186-af1c-005056b9f17c", |

| | | | | | "compositeUuid"=>"dc00a658-204b-db99-8337-005056b9f17c"}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"RAID_1", |

| | | | | | "attributes"=>{"scope"=>3}, |

| | | | | | "child-1"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"addressSpace"=>6442450944, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1496855782, |

| | | | | | "faultDomainId"=>"58a4a3da-1284-ce11-70a5-005056b9f17c", |

| | | | | | "subFaultDomainId"=>"58a4a3da-1284-ce11-70a5-005056b9f17c"}, |

| | | | | | "componentUuid"=>"dc00a658-0ee2-6a9a-d2a0-005056b9f17c", |

| | | | | | "diskUuid"=>"529873dc-6f15-bf2c-51a2-be0b4f6e755c"}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Component", |

| | | | | | "attributes"=> |

| | | | | | {"addressSpace"=>6442450944, |

| | | | | | "componentState"=>5, |

| | | | | | "componentStateTS"=>1496855782, |

| | | | | | "faultDomainId"=>"58a4a4f2-c6ed-db2a-0b14-005056b90377", |

| | | | | | "subFaultDomainId"=>"58a4a4f2-c6ed-db2a-0b14-005056b90377"}, |

| | | | | | "componentUuid"=>"dc00a658-dc2d-6c9a-f76b-005056b9f17c", |

| | | | | | "diskUuid"=>"526bc1a4-1d10-6783-3216-7fed84c9d71f"}}, |

| | | | | | "child-2"=> |

| | | | | | {"type"=>"Witness", |

| | | | | | "attributes"=> |

| | | | | | {"componentState"=>5, |

| | | | | | "componentStateTS"=>1496855824, |

| | | | | | "isWitness"=>1, |

| | | | | | "faultDomainId"=>"58a4a441-1c1a-4243-b64c-005056b968bd", |

| | | | | | "subFaultDomainId"=>"58a4a441-1c1a-4243-b64c-005056b968bd"}, |

| | | | | | "componentUuid"=>"dc00a658-5eff-6c9a-215e-005056b9f17c", |

| | | | | | "diskUuid"=>"52678934-7d90-d712-61c6-6919990a18f3"}} |

| 3 | DOM_OBJECT | 593d3859-1c83-25fa-6deb-005056b90377 | vesx3.virten.lab | Healthy | {"type"=>"Configuration", |

| | | | | | "attributes"=> |

| | | | | | {"CSN"=>4, |

| | | | | | "addressSpace"=>536870912, |

[...]
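The Content of a DOM_OBJECT is a tree: a Configuration node with "child-N" entries that are either RAID nodes or leaves of type Component or Witness. A small recursive walk collects the leaves. The dictionary below is a shortened, hand-converted copy of object 1 from the output above. iter_components is a name I chose for this sketch, and state 5 corresponds to ACTIVE as printed by vsan.vm_object_info.

```python
# Shortened copy of DOM_OBJECT da00a658-f668-f186-af1c-005056b9f17c.
DOM_OBJECT = {
    "type": "Configuration",
    "child-1": {
        "type": "RAID_1",
        "child-1": {
            "type": "Component",
            "attributes": {"componentState": 5},
            "componentUuid": "da00a658-c6d2-6c87-8146-005056b9f17c",
            "diskUuid": "522a4211-57ee-f31f-3a84-18a75a0c0ff3",
        },
        "child-2": {
            "type": "Component",
            "attributes": {"componentState": 5},
            "componentUuid": "0b4ef658-56ca-6b29-f5fa-005056b9f17c",
            "diskUuid": "52678934-7d90-d712-61c6-6919990a18f3",
        },
    },
    "child-2": {
        "type": "Witness",
        "attributes": {"componentState": 5, "isWitness": 1},
        "componentUuid": "0e4ef658-2c46-6ba4-c422-005056b9f17c",
        "diskUuid": "52e3ae7e-b744-796d-de9f-ff73b0cd4df9",
    },
}


def iter_components(node):
    """Yield every Component and Witness leaf below a configuration node."""
    if node.get("type") in ("Component", "Witness"):
        yield node
    for key, child in node.items():
        if key.startswith("child-"):
            yield from iter_components(child)


leaves = list(iter_components(DOM_OBJECT))
for leaf in leaves:
    state = leaf["attributes"]["componentState"]
    print(f'{leaf["type"]:9} {leaf["componentUuid"]} '
          f'state={state} disk={leaf["diskUuid"]}')
```

The diskUuid of each leaf can then be fed into vsan.disk_object_info or matched against the DISK entries.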

Example 4 - List LSOM Objects (Components) from a specific ESXi Host:

/localhost/DC> vsan.cmmds_find ~cluster -t LSOM_OBJECT -o 58a4a4f2-c6ed-db2a-0b14-005056b90377

+---+-------------+--------------------------------------+------------------+---------+-----------------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+-------------+--------------------------------------+------------------+---------+-----------------------------------------------------------+

| 1 | LSOM_OBJECT | b38df658-6ce8-3939-b551-005056b9f17c | vesx3.virten.lab | Healthy | {"diskUuid"=>"522a4211-57ee-f31f-3a84-18a75a0c0ff3", |

| | | | | | "compositeUuid"=>"b38df658-36af-a138-68a2-005056b9f17c", |

| | | | | | "capacityUsed"=>3292528640, |

| | | | | | "physCapacityUsed"=>2508193792, |

| | | | | | "dedupUniquenessMetric"=>100} |

| 2 | LSOM_OBJECT | b28df658-5a55-9e82-0d22-005056b9f17c | vesx3.virten.lab | Healthy | {"diskUuid"=>"526bc1a4-1d10-6783-3216-7fed84c9d71f", |

| | | | | | "compositeUuid"=>"b28df658-c089-2d82-5649-005056b9f17c", |

| | | | | | "capacityUsed"=>373293056, |

| | | | | | "physCapacityUsed"=>369098752, |

| | | | | | "dedupUniquenessMetric"=>100} |

| 3 | LSOM_OBJECT | da00a658-c6d2-6c87-8146-005056b9f17c | vesx3.virten.lab | Healthy | {"diskUuid"=>"522a4211-57ee-f31f-3a84-18a75a0c0ff3", |

| | | | | | "compositeUuid"=>"da00a658-f668-f186-af1c-005056b9f17c", |

| | | | | | "capacityUsed"=>402653184, |

| | | | | | "physCapacityUsed"=>398458880, |

| | | | | | "dedupUniquenessMetric"=>100} |

| 4 | LSOM_OBJECT | dc00a658-dc2d-6c9a-f76b-005056b9f17c | vesx3.virten.lab | Healthy | {"diskUuid"=>"526bc1a4-1d10-6783-3216-7fed84c9d71f", |

| | | | | | "compositeUuid"=>"dc00a658-204b-db99-8337-005056b9f17c", |

| | | | | | "capacityUsed"=>12582912, |

| | | | | | "physCapacityUsed"=>4194304, |

| | | | | | "dedupUniquenessMetric"=>100} |

| 5 | LSOM_OBJECT | 593d3859-885e-8afa-a085-005056b90377 | vesx3.virten.lab | Healthy | {"diskUuid"=>"526bc1a4-1d10-6783-3216-7fed84c9d71f", |

| | | | | | "compositeUuid"=>"593d3859-1c83-25fa-6deb-005056b90377", |

| | | | | | "capacityUsed"=>12582912, |

| | | | | | "physCapacityUsed"=>4194304, |

| | | | | | "dedupUniquenessMetric"=>100} |

+---+-------------+--------------------------------------+------------------+---------+-----------------------------------------------------------+
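Because every LSOM_OBJECT entry carries its diskUuid and capacityUsed, the output can be aggregated per disk. The sketch below does this for the five entries of Example 4. The values are copied from the table, used_per_disk is a hypothetical helper, and I assume capacityUsed is given in bytes.

```python
from collections import defaultdict

# (diskUuid, capacityUsed) pairs copied from Example 4.
COMPONENTS = [
    ("522a4211-57ee-f31f-3a84-18a75a0c0ff3", 3292528640),
    ("526bc1a4-1d10-6783-3216-7fed84c9d71f", 373293056),
    ("522a4211-57ee-f31f-3a84-18a75a0c0ff3", 402653184),
    ("526bc1a4-1d10-6783-3216-7fed84c9d71f", 12582912),
    ("526bc1a4-1d10-6783-3216-7fed84c9d71f", 12582912),
]


def used_per_disk(components):
    """Sum capacityUsed of all components grouped by the disk they live on."""
    totals = defaultdict(int)
    for disk_uuid, capacity_used in components:
        totals[disk_uuid] += capacity_used
    return dict(totals)


totals = used_per_disk(COMPONENTS)
for disk_uuid, used in totals.items():
    print(f"{disk_uuid}: {used / 1024 ** 3:.2f} GiB in components")
```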

vsan.vm_object_info [-c|-p|-i] ~vm

Prints vSAN object information about a VM. This command is the equivalent of the Manage > VM Storage Policies tab in the vSphere Web Client and allows you to identify where the stripes, mirrors and witnesses of virtual disks are located. The command returns information about:

- Namespace directory (Virtual Machine home directory)

- Disk backing (Virtual Disks)

- Component layout (RAID-0, RAID-1)

- Number of objects (DOM Objects)

- UUID from objects and components (useful for other commands)

- Location of object stripes and mirrors

- Location of object witness

- Storage Policy (hostFailuresToTolerate, forceProvisioning, stripeWidth, etc.)

- Resync Status

Usage:

- -c, --cluster: Cluster on which to fetch the object info

- -p, --perspective-from-host: Host to query object info from

- -i, --include-detailed-usage: Include detailed usage info

Example 1 - Print physical location and component layout of all DOM Objects of a VM:

/localhost/DC> vsan.vm_object_info ~vm

VM vMA:

Namespace directory

DOM Object: b28df658-c089-2d82-5649-005056b9f17c (v5, owner: vesx2.virten.lab, proxy owner: None, policy: spbmProfileId = aa6d5a82-1c88-45da-85d3-3d74b91a5bad, spbmProfileGenerationNumber = 0, stripeWidth = 1, SCSN = 41, hostFailuresToTolerate = 1, forceProvisioning = 0, CSN = 36, spbmProfileName = Virtual SAN Default Storage Policy, cacheReservation = 0, proportionalCapacity = [0, 100])

RAID_1

Component: b28df658-5a55-9e82-0d22-005056b9f17c (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.3 GB, proxy component: false)

Component: b28df658-16bd-9f82-d9c4-005056b9f17c (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.3 GB, proxy component: false)

Witness: b28df658-707d-a082-ff91-005056b9f17c (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.0 GB, proxy component: false)

Disk backing: [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmdk

DOM Object: b38df658-36af-a138-68a2-005056b9f17c (v5, owner: vesx2.virten.lab, proxy owner: None, policy: spbmProfileId = aa6d5a82-1c88-45da-85d3-3d74b91a5bad, spbmProfileGenerationNumber = 0, stripeWidth = 1, SCSN = 36, hostFailuresToTolerate = 1, forceProvisioning = 0, CSN = 30, spbmProfileName = Virtual SAN Default Storage Policy, cacheReservation = 0, proportionalCapacity = 100)

RAID_1

Component: b38df658-66b6-3839-295a-005056b9f17c (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 3.1 GB, proxy component: false)

Component: b38df658-6ce8-3939-b551-005056b9f17c (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 3.1 GB, proxy component: false)

Witness: b38df658-0c9d-3a39-9867-005056b9f17c (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.0 GB, proxy component: false)
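To check a placement automatically, the Component and Witness lines can be matched with a regular expression. The following sketch takes the second DOM Object of the example and verifies that all three components are ACTIVE and located on different hosts, which is what I would expect for hostFailuresToTolerate = 1 in this three-node cluster. The pattern and the function names are my own and only cover the line format shown here.

```python
import re

# Component lines of DOM Object b38df658-36af-a138-68a2-005056b9f17c.
OUTPUT = """\
RAID_1
Component: b38df658-66b6-3839-295a-005056b9f17c (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,
votes: 1, usage: 3.1 GB, proxy component: false)
Component: b38df658-6ce8-3939-b551-005056b9f17c (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,
votes: 1, usage: 3.1 GB, proxy component: false)
Witness: b38df658-0c9d-3a39-9867-005056b9f17c (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,
votes: 1, usage: 0.0 GB, proxy component: false)
"""

LINE = re.compile(
    r"^\s*(?P<kind>Component|Witness): (?P<uuid>[0-9a-f-]{36}) "
    r"\(state: (?P<state>\w+) \(\d+\), host: (?P<host>[^,]+), "
    r"md: (?P<md>[^,]+),",
    re.MULTILINE,
)


def placements(text):
    """Return kind, uuid, state, host and md for each component line."""
    return [m.groupdict() for m in LINE.finditer(text)]


def all_active_on_distinct_hosts(items):
    """True if every component is ACTIVE and no host is used twice."""
    hosts = [p["host"] for p in items]
    return (all(p["state"] == "ACTIVE" for p in items)
            and len(set(hosts)) == len(hosts))


items = placements(OUTPUT)
for p in items:
    print(f'{p["kind"]:9} {p["host"]} {p["md"]}')
print("placement ok:", all_active_on_distinct_hosts(items))
```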

vsan.disk_object_info ~cluster [disk_uuid]

Prints all objects that are located on a physical disk. This command helps during troubleshooting when you want to identify all objects on a physical disk. You have to know the disk UUID, which can be identified with the vsan.cmmds_find command.

Example 1 – Get Disk UUID with vsan.cmmds_find and display all objects located on this disk:

/localhost/DC> vsan.cmmds_find ~cluster -t DISK

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| # | Type | UUID | Owner | Health | Content |

+---+------+--------------------------------------+------------------+---------+-----------------------------------------------------+

| 1 | DISK | 521eb724-1c85-50bf-0640-65995452ee8b | vesx3.virten.lab | Healthy | {"capacity"=>1048576, |

| | | | | | "iops"=>20000, |

[...]

/localhost/DC> vsan.disk_object_info ~cluster 521eb724-1c85-50bf-0640-65995452ee8b

Physical disk mpx.vmhba0:C0:T1:L0 (521eb724-1c85-50bf-0640-65995452ee8b):

DOM Object: b38df658-36af-a138-68a2-005056b9f17c (v5, owner: vesx2.virten.lab, proxy owner: None, policy: spbmProfileId = aa6d5a82-1c88-45da-85d3-3d74b91a5bad, hostFailuresToTolerate = 1, cacheReservation = 0, proportionalCapacity = 100, spbmProfileGenerationNumber = 0, forceProvisioning = 0, SCSN = 36, CSN = 30, stripeWidth = 1, spbmProfileName = Virtual SAN Default Storage Policy)

Context: Part of VM vMA: Disk: [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmdk

RAID_1

Component: b38df658-66b6-3839-295a-005056b9f17c (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 3.1 GB, proxy component: false)

Component: b38df658-6ce8-3939-b551-005056b9f17c (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: **mpx.vmhba0:C0:T1:L0**,

votes: 1, usage: 3.1 GB, proxy component: false)

Witness: b38df658-0c9d-3a39-9867-005056b9f17c (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T3:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.0 GB, proxy component: false)

DOM Object: da00a658-f668-f186-af1c-005056b9f17c (v5, owner: vesx3.virten.lab, proxy owner: None, policy: spbmProfileId = aa6d5a82-1c88-45da-85d3-3d74b91a5bad, hostFailuresToTolerate = 1, cacheReservation = 0, proportionalCapacity = [0, 100], spbmProfileGenerationNumber = 0, forceProvisioning = 0, SCSN = 74, spbmProfileName = Virtual SAN Default Storage Policy, CSN = 70, stripeWidth = 1, objectVersion = 5)

Context: Part of VM testvm: Namespace directory

RAID_1

Component: da00a658-c6d2-6c87-8146-005056b9f17c (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: **mpx.vmhba0:C0:T1:L0**,

votes: 1, usage: 0.4 GB, proxy component: false)

Component: 0b4ef658-56ca-6b29-f5fa-005056b9f17c (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.4 GB, proxy component: false)

Witness: 0e4ef658-2c46-6ba4-c422-005056b9f17c (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0,

votes: 1, usage: 0.0 GB, proxy component: false)

DOM Object: b28df658-c089-2d82-5649-005056b9f17c (v5, owner: vesx2.virten.lab, proxy owner: None, policy: spbmProfileId = aa6d5a82-1c88-45da-85d3-3d74b91a5bad, hostFailuresToTolerate = 1, cacheReservation = 0, proportionalCapacity = [0, 100], spbmProfileGenerationNumber = 0, forceProvisioning = 0, SCSN = 41, CSN = 36, stripeWidth = 1, spbmProfileName = Virtual SAN Default Storage Policy)

Context: Part of VM vMA: Namespace directory

[...]

vsan.object_info [-s|-i] ~cluster [obj_uuid]

Prints information about an object's physical location and configuration. The output is very similar to that of vsan.vm_object_info, but the command is used against a single object.

- -s, --skip-ext-attr: Don't fetch extended attributes

- -i, --include-detailed-usage: Include detailed usage info

Example 1 - Print physical location of a DOM Object:

/localhost/DC> vsan.object_info ~cluster 7e62c152-7dfb-c6e5-07b8-001b2193b9a4

Fetching VSAN disk info from vesx1.virten.lab (may take a moment) ...

Fetching VSAN disk info from vesx2.virten.lab (may take a moment) ...

Fetching VSAN disk info from vesx3.virten.lab (may take a moment) ...

Done fetching VSAN disk infos

DOM Object: 7e62c152-7dfb-c6e5-07b8-001b2193b9a4 (owner: vesx1.virten.lab, policy: hostFailuresToTolerate = 1, forceProvisioning = 1, proportionalCapacity = 100)

Witness: c135c452-cd77-0733-1708-001b2193b9a4 (state: ACTIVE (5), host: vesx3.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0)

RAID_1

Component: c135c452-2f04-0533-dbbc-001b2193b9a4 (state: ACTIVE (5), host: vesx1.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0)

Component: 7e62c152-763d-1400-2b06-001b2193b9a4 (state: ACTIVE (5), host: vesx2.virten.lab, md: mpx.vmhba0:C0:T2:L0, ssd: mpx.vmhba0:C0:T1:L0)

vsan.object_reconfigure [-p] ~cluster [obj_uuid]

Configure an object with a new policy. To use this command, you need to know the object UUID which can be identified with vsan.cmmds_find or vsan.vm_object_info.

- -p, --policy: New policy

Available policy options are:

- hostFailuresToTolerate (Number of failures to tolerate)

- forceProvisioning (If VSAN can't fulfill the policy requirements for an object, it will still deploy it)

- stripeWidth (Number of disk stripes per object)

- cacheReservation (Flash read cache reservation)

- proportionalCapacity (Object space reservation)

Be careful to preserve the existing policy settings: always specify all options, not only the one you want to change. The policy has to be defined in the following format:

'(("hostFailuresToTolerate" i1) ("forceProvisioning" i1))'
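Since all options have to be specified every time, it helps to start from the current policy and only override what should change. The sketch below builds the policy string from a dictionary. policy_string and merged_policy are hypothetical helpers, and they only handle integer values written with the i prefix, as in the examples of this article.

```python
def policy_string(options):
    """Render {'hostFailuresToTolerate': 1} as (("hostFailuresToTolerate" i1))."""
    parts = []
    for name, value in options.items():
        # bool is a subclass of int in Python, so it is rejected explicitly.
        if isinstance(value, bool) or not isinstance(value, int):
            raise TypeError(f"{name}: only integer values are handled here")
        parts.append(f'("{name}" i{value})')
    return "(" + " ".join(parts) + ")"


def merged_policy(current, changes):
    """Keep every option of the current policy and apply the changes on top."""
    return policy_string({**current, **changes})


current = {"hostFailuresToTolerate": 1, "stripeWidth": 1}
new_policy = merged_policy(current, {"hostFailuresToTolerate": 2})
print(f"-p '{new_policy}'")
```

The resulting string can be passed to the -p option in single quotes.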

Example 1 - Change the disk policy to tolerate 2 host failures. Current policy is hostFailuresToTolerate = 1, stripeWidth = 1

/localhost/DC> vsan.object_reconfigure ~cluster 5078bd52-2977-8cf9-107c-00505687439c -p '(("hostFailuresToTolerate" i2) ("stripeWidth" i1))'

Example 2 - Disable Force provisioning. Current policy is hostFailuresToTolerate = 1, stripeWidth = 1

/localhost/DC> vsan.object_reconfigure ~cluster 5078bd52-2977-8cf9-107c-00505687439c -p '(("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i0))'

Example 3 - Locate the DOM Object of a virtual disk with vsan.vm_object_info and change its stripe width from 2 to 1. Current policy is hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1

/localhost/DC> vsan.vm_object_info ~vm

VM perf1:

Namespace directory

[...]

Disk backing: [vsanDatastore] 6978bd52-4d92-05ed-dad2-005056871792/vma.virten.lab.vmdk

DOM Object: 7e78bd52-7595-1716-85a2-005056871792 (owner: esx1.virten.lab, policy: hostFailuresToTolerate = 1, stripeWidth = 2, forceProvisioning = 1)

Witness: aee5bd52-7443-177b-74a8-005056871792 (state: ACTIVE (5), host: esx2.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_1

RAID_0

Component: 36debd52-7390-a05d-9225-005056871792 (state: ACTIVE (5), esx3.virten.lab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 36debd52-a9b8-965d-03a6-005056871792 (state: ACTIVE (5), esx3.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

RAID_0

Component: 7f78bd52-2d59-c558-09f9-005056871792 (state: ACTIVE (5), esx1.virten.lab, md: mpx.vmhba1:C0:T2:L0, ssd: mpx.vmhba1:C0:T1:L0)

Component: 7f78bd52-d827-c458-9d94-005056871792 (state: ACTIVE (5), esx1.virten.lab, md: mpx.vmhba1:C0:T4:L0, ssd: mpx.vmhba1:C0:T1:L0)

/localhost/DC> vsan.object_reconfigure ~cluster 7e78bd52-7595-1716-85a2-005056871792 -p '(("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i1))'

Reconfiguring '7e78bd52-7595-1716-85a2-005056871792' to (("hostFailuresToTolerate" i1) ("stripeWidth" i1) ("forceProvisioning" i1))

All reconfigs initiated. Synching operation may be happening in the background

vsan.vmdk_stats ~cluster|~host ~vm

Display read cache and capacity stats for Virtual Machines and VMDKs.

Example 1 – Display VM disk statistics:

/localhost/DC> vsan.vmdk_stats ~cluster vSAN65/resourcePool/vms/vMA/

Fetching general information about cluster

Fetching general information about VMs

Fetching information about vSAN objects

Fetching vSAN stats

Done fetching info, drawing table

+---------------------------------------------------------------+-----------+---------------+-----------+----------+----------+

| | Disk Capacity (in GB) | Read Cache (in GB) |

+---------------------------------------------------------------+-----------+---------------+-----------+----------+----------+

| Disk Name | Disk Size | Used Capacity | Data Size | Used | Reserved |

+---------------------------------------------------------------+-----------+---------------+-----------+----------+----------+

| vMA | | | | | |

| [vsanDatastore] b28df658-c089-2d82-5649-005056b9f17c/vMA.vmdk | 3.0 | 6.0 (2.0x) | 4.7 | 0.0 | 0.0 |

+---------------------------------------------------------------+-----------+---------------+-----------+----------+----------+