vSAN 6.6 RVC Guide

The "vSAN 6.6 RVC Guide" series explains how to manage your VMware Virtual SAN environment with the Ruby vSphere Console (RVC). RVC is an interactive command-line tool to control and automate your platform. If you are new to RVC, make sure to read the Getting Started with Ruby vSphere Console Guide. All commands are from vSAN 6.6, the latest version at the time of writing.

In this fourth part, I'm working with commands related to the vSAN health plugin. These commands are only available when the vSAN Health Service is installed.

- vsan.health.cluster_status

- vsan.health.health_summary

- vsan.health.cluster_rebalance

- vsan.health.cluster_debug_multicast

- vsan.health.multicast_speed_test

- vsan.health.hcl_update_db

- vsan.health.cluster_repair_immediately

- vsan.health.cluster_attach_to_sr

- vsan.health.health_check_interval_status

- vsan.health.health_check_interval_configure

- vsan.health.cluster_proxy_status

- vsan.health.cluster_proxy_configure

- vsan.health.cluster_load_test_run

- vsan.health.cluster_load_test_prepare

- vsan.health.cluster_load_test_cleanup

- vsan.health.silent_health_check_configure

- vsan.health.silent_health_check_status

To shorten commands, I've used marks for the vSAN-enabled cluster, a virtual machine, an ESXi host participating in the vSAN cluster and the vCenter Server. This enables me to use ~cluster, ~vm, ~esx and ~vc in examples:

```
/localhost/DC> mark cluster /vc.virten.lab/Datacenter/computers/VSAN-Cluster/
/localhost/DC> mark vm /vc.virten.lab/Datacenter/vms/vma.virten.lab
/localhost/DC> mark esx /vc.virten.lab/Datacenter/computers/VSAN-Cluster/hosts/esx1.virten.lab/
/localhost/DC> mark vc /vc.virten.lab/
```

Object Management

vsan.health.cluster_status ~cluster

Verifies that the vSAN health check plugin has been successfully installed in the cluster.

Example 1 – Show vSAN health plugin status:

```
> vsan.health.cluster_status ~cluster
Configuration of ESX vSAN Health Extension: installed (OK)
Host 'vesx3.virten.lab' has health system version '6.6.0' installed
Host 'vesx2.virten.lab' has health system version '6.6.0' installed
Host 'vesx1.virten.lab' has health system version '6.6.0' installed
vCenter Server has health system version '6.6.0' installed
```
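If you capture this output (for example by copying it from the RVC session), a few lines of Python can confirm that every host runs the same health system version as vCenter. This is a minimal sketch: `hosts_with_version_mismatch` is a hypothetical helper that only parses the text lines shown above and does not connect to vCenter.

```python
import re

# Patterns for the two kinds of version lines printed by vsan.health.cluster_status.
HOST_RE = re.compile(r"Host '([^']+)' has health system version '([^']+)' installed")
VC_RE = re.compile(r"vCenter Server has health system version '([^']+)' installed")


def hosts_with_version_mismatch(output: str) -> list[str]:
    """Return hosts whose health system version differs from vCenter's.

    Hypothetical helper: it only parses captured text.
    """
    vc_match = VC_RE.search(output)
    if vc_match is None:
        raise ValueError("no vCenter Server version line found")
    vc_version = vc_match.group(1)
    return [host for host, version in HOST_RE.findall(output) if version != vc_version]


sample = """Configuration of ESX vSAN Health Extension: installed (OK)
Host 'vesx3.virten.lab' has health system version '6.6.0' installed
Host 'vesx2.virten.lab' has health system version '6.6.0' installed
Host 'vesx1.virten.lab' has health system version '6.6.0' installed
vCenter Server has health system version '6.6.0' installed"""

print(hosts_with_version_mismatch(sample))  # []
```

An empty list means all hosts match vCenter, as in the example.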

vsan.health.health_summary [-c] ~cluster

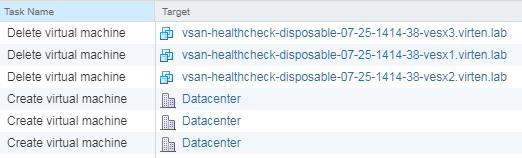

Performs a basic health check on the vSAN cluster. This is one of the first commands to run when troubleshooting. With the -c option, it also verifies that a VM can be created on every ESXi host in the vSAN cluster.

- -c, --create-vm-test: performs a proactive VM creation test.

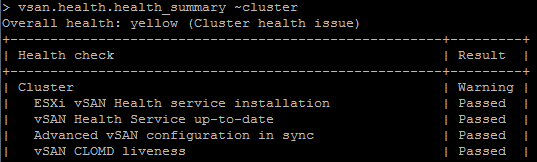

Example 1 – Perform a basic health check:

```
> vsan.health.health_summary ~cluster
Overall health: yellow (Cluster health issue)
+------------------------------------------------------+---------+
| Health check                                         | Result  |
+------------------------------------------------------+---------+
| Cluster                                              | Warning |
|   ESXi vSAN Health service installation              | Passed  |
|   vSAN Health Service up-to-date                     | Passed  |
|   Advanced vSAN configuration in sync                | Passed  |
|   vSAN CLOMD liveness                                | Passed  |
|   vSAN Disk Balance                                  | Warning |
|   Resync operations throttling                       | Passed  |
|   vSAN cluster configuration consistency             | Passed  |
|   Time is synchronized across hosts and VC           | Passed  |
|   vSphere cluster members match vSAN cluster members | Passed  |
|   Software version compatibility                     | Passed  |
|   Disk format version                                | Passed  |
+------------------------------------------------------+---------+
| Hardware compatibility                               | Warning |
|   vSAN HCL DB up-to-date                             | Warning |
|   vSAN HCL DB Auto Update                            | skipped |
|   SCSI controller is VMware certified                | skipped |
|   Controller is VMware certified for ESXi release    | skipped |
|   Controller driver is VMware certified              | skipped |
|   Controller firmware is VMware certified            | skipped |
|   Controller disk group mode is VMware certified     | skipped |
+------------------------------------------------------+---------+
| Performance service                                  | Warning |
|   Performance service status                         | Warning |
+------------------------------------------------------+---------+
| Online health (Last check: 48 minute(s) ago)         | Warning |
|   Customer experience improvement program (CEIP)     | Passed  |
|   Online health connectivity                         | skipped |
|   Disks usage on storage controller                  | Passed  |
|   vSAN max component size                            | Warning |
+------------------------------------------------------+---------+
| Network                                              | Passed  |
|   Hosts disconnected from VC                         | Passed  |
|   Hosts with connectivity issues                     | Passed  |
|   vSAN cluster partition                             | Passed  |
|   All hosts have a vSAN vmknic configured            | Passed  |
|   All hosts have matching subnets                    | Passed  |
|   vSAN: Basic (unicast) connectivity check           | Passed  |
|   vSAN: MTU check (ping with large packet size)      | Passed  |
|   vMotion: Basic (unicast) connectivity check        | skipped |
|   vMotion: MTU check (ping with large packet size)   | Passed  |
|   Network latency check                              | Passed  |
+------------------------------------------------------+---------+
| Physical disk                                        | Passed  |
|   Overall disks health                               | Passed  |
|   Metadata health                                    | Passed  |
|   Disk capacity                                      | Passed  |
|   Software state health                              | Passed  |
|   Congestion                                         | Passed  |
|   Component limit health                             | Passed  |
|   Component metadata health                          | Passed  |
|   Memory pools (heaps)                               | Passed  |
|   Memory pools (slabs)                               | Passed  |
+------------------------------------------------------+---------+
| Data                                                 | Passed  |
|   vSAN object health                                 | Passed  |
+------------------------------------------------------+---------+
| Limits                                               | Passed  |
|   Current cluster situation                          | Passed  |
|   After 1 additional host failure                    | Passed  |
|   Host component limit                               | Passed  |
+------------------------------------------------------+---------+

Details about any failed test below ...

Cluster - vSAN Disk Balance: yellow
+--------------------+-------+
| Metric             | Value |
+--------------------+-------+
| Average Disk Usage | 15 %  |
| Maximum Disk Usage | 35 %  |
| Maximum Variance   | 30 %  |
| LM Balance Index   | 10 %  |
+--------------------+-------+
+------------------+-----------------------------------------+-------------------------------+--------------+
| Host             | Device                                  | Rebalance State               | Data To Move |
+------------------+-----------------------------------------+-------------------------------+--------------+
| vesx3.virten.lab | Local VMware Disk (mpx.vmhba0:C0:T2:L0) | Proactive rebalance is needed | 1.9889 GB    |
+------------------+-----------------------------------------+-------------------------------+--------------+

Hardware compatibility - vSAN HCL DB up-to-date: yellow
+--------------------------------+---------------------+
| Entity                         | Time in UTC         |
+--------------------------------+---------------------+
| Current time                   | 2017-07-25 14:13:23 |
| Local HCL DB copy last updated | 2017-04-25 16:56:42 |
+--------------------------------+---------------------+

Hardware compatibility - SCSI controller is VMware certified: skipped
+------------------+--------+------------------------------------+--------------+---------------------+----------------------+
| Host             | Device | Current controller                 | Used by vSAN | PCI ID              | Controller certified |
+------------------+--------+------------------------------------+--------------+---------------------+----------------------+
| vesx2.virten.lab | vmhba0 | VMware Inc. PVSCSI SCSI Controller | Yes          | 15ad,07c0,15ad,07c0 | Warning              |
| vesx1.virten.lab | vmhba0 | VMware Inc. PVSCSI SCSI Controller | Yes          | 15ad,07c0,15ad,07c0 | Warning              |
| vesx3.virten.lab | vmhba0 | VMware Inc. PVSCSI SCSI Controller | Yes          | 15ad,07c0,15ad,07c0 | Warning              |
+------------------+--------+------------------------------------+--------------+---------------------+----------------------+

Hardware compatibility - Controller is VMware certified for ESXi release: skipped
+------------------+--------------------------------------------+----------------------+-------------------+-------------------------+
| Host             | Device                                     | Current ESXi release | Release supported | Certified ESXi releases |
+------------------+--------------------------------------------+----------------------+-------------------+-------------------------+
| vesx2.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | vSAN 6.6             | Warning           | N/A                     |
| vesx1.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | vSAN 6.6             | Warning           | N/A                     |
| vesx3.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | vSAN 6.6             | Warning           | N/A                     |
+------------------+--------------------------------------------+----------------------+-------------------+-------------------------+

Hardware compatibility - Controller driver is VMware certified: skipped
+------------------+--------------------------------------------+-----------------------------------+------------------+---------------------+
| Host             | Device                                     | Current driver                    | Driver certified | Recommended drivers |
+------------------+--------------------------------------------+-----------------------------------+------------------+---------------------+
| vesx2.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | pvscsi (0.1-1vmw.650.0.0.4564106) | Warning          | N/A                 |
| vesx1.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | pvscsi (0.1-1vmw.650.0.0.4564106) | Warning          | N/A                 |
| vesx3.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | pvscsi (0.1-1vmw.650.0.0.4564106) | Warning          | N/A                 |
+------------------+--------------------------------------------+-----------------------------------+------------------+---------------------+

Hardware compatibility - Controller firmware is VMware certified: skipped
+------------------+--------------------------------------------+------------------+--------------------+-----------------------+
| Host             | Device                                     | Current firmware | Firmware certified | Recommended firmwares |
+------------------+--------------------------------------------+------------------+--------------------+-----------------------+
| vesx2.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | N/A              | Warning            | N/A                   |
| vesx1.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | N/A              | Warning            | N/A                   |
| vesx3.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | N/A              | Warning            | N/A                   |
+------------------+--------------------------------------------+------------------+--------------------+-----------------------+

Hardware compatibility - Controller disk group mode is VMware certified: skipped
+------------------+--------------------------------------------+-------------------------+---------------------------+-----------------------------+
| Host             | Device                                     | Current disk group mode | Disk group mode certified | Recommended disk group mode |
+------------------+--------------------------------------------+-------------------------+---------------------------+-----------------------------+
| vesx2.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | All Flash               | Warning                   | N/A                         |
| vesx1.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | All Flash               | Warning                   | N/A                         |
| vesx3.virten.lab | vmhba0: VMware Inc. PVSCSI SCSI Controller | All Flash               | Warning                   | N/A                         |
+------------------+--------------------------------------------+-------------------------+---------------------------+-----------------------------+

Performance service - Performance service status: yellow
+---------+---------------------------------+
| Result  | Status                          |
+---------+---------------------------------+
| Warning | Performance service is disabled |
+---------+---------------------------------+

Online health (Last check: 48 minute(s) ago) - vSAN max component size: yellow
+------------------+--------------------------------+---------+--------------------------------------+----------------------------------+
| Host             | Cluster Smallest Disk Size(GB) | Status  | Recommended Max Component Size in GB | Current Max Component Size in GB |
+------------------+--------------------------------+---------+--------------------------------------+----------------------------------+
| vesx1.virten.lab | 10                             | Warning | 180                                  | 255                              |
| vesx3.virten.lab | 10                             | Warning | 180                                  | 255                              |
| vesx2.virten.lab | 10                             | Warning | 180                                  | 255                              |
+------------------+--------------------------------+---------+--------------------------------------+----------------------------------+

Network - vMotion: Basic (unicast) connectivity check: skipped
+-----------+---------+-----------+-------------+
| From Host | To Host | To Device | Ping result |
+-----------+---------+-----------+-------------+
+-----------+---------+-----------+-------------+
+------------------+------------------+-----------+-------------+
| From Host        | To Host          | To Device | Ping result |
+------------------+------------------+-----------+-------------+
| vesx2.virten.lab | vesx1.virten.lab | vmk1      | Passed      |
| vesx2.virten.lab | vesx3.virten.lab | vmk1      | Passed      |
| vesx1.virten.lab | vesx2.virten.lab | vmk1      | Passed      |
| vesx1.virten.lab | vesx3.virten.lab | vmk1      | Passed      |
| vesx3.virten.lab | vesx2.virten.lab | vmk1      | Passed      |
| vesx3.virten.lab | vesx1.virten.lab | vmk1      | Passed      |
+------------------+------------------+-----------+-------------+
[[0.070919772, "initial connect"], [2.234612291, "cluster-health"], [0.022485742, "table-render"]]
```

Example 2 – Perform a proactive VM creation test:

```
> vsan.health.health_summary ~cluster -c
[...]
Performing pro-active VM creation test ...
+------------------+---------+
| Check            | Result  |
+------------------+---------+
| vesx1.virten.lab | Success |
| vesx2.virten.lab | Success |
| vesx3.virten.lab | Success |
+------------------+---------+
```
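When you save the health summary text for later review, you can pull out every individual check that did not pass. The sketch below assumes the layout of the summary table in Example 1: group rows such as "Cluster" start directly after `| `, individual checks are indented by two extra spaces, and the detail section starts with "Details about any failed test below". The function name `non_passed_checks` is hypothetical, and "skipped" results are reported too because they are not "Passed".

```python
def non_passed_checks(output: str) -> list[tuple[str, str, str]]:
    """Return (group, check, result) for each individual check that did not pass."""
    group = ""
    found = []
    for line in output.splitlines():
        if line.startswith("Details about any failed test"):
            break  # only the summary table is parsed
        row = line.strip()
        if not row.startswith("|"):
            continue  # borders ("+---+") and other text
        cells = row.strip("|").split("|")
        if len(cells) != 2:
            continue
        name, result = cells[0].strip(), cells[1].strip()
        if name == "Health check":
            continue  # header row
        if not cells[0].startswith("   "):
            group = name  # top-level group row such as "Cluster"
        elif result != "Passed":
            found.append((group, name, result))
    return found


summary = """\
| Cluster                                              | Warning |
|   vSAN CLOMD liveness                                | Passed  |
|   vSAN Disk Balance                                  | Warning |
| Hardware compatibility                               | Warning |
|   vSAN HCL DB up-to-date                             | Warning |"""

for group, check, result in non_passed_checks(summary):
    print(f"{group} / {check}: {result}")
```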

vsan.health.cluster_rebalance ~cluster

This command triggers an immediate rebalance of the vSAN cluster. It is similar to vsan.proactive_rebalance. The "vSAN Disk Balance" health check, which you can view with the vsan.health.health_summary command, shows whether a rebalance is required.

Example 1 – Verify and rebalance vSAN disks:

```
> vsan.health.health_summary ~cluster
[...]
|   vSAN Disk Balance                                  | Warning |
[...]
Details about any failed test below ...
Cluster - vSAN Disk Balance: yellow
+--------------------+-------+
| Metric             | Value |
+--------------------+-------+
| Average Disk Usage | 15 %  |
| Maximum Disk Usage | 35 %  |
| Maximum Variance   | 30 %  |
| LM Balance Index   | 10 %  |
+--------------------+-------+
+------------------+-----------------------------------------+-------------------------------+--------------+
| Host             | Device                                  | Rebalance State               | Data To Move |
+------------------+-----------------------------------------+-------------------------------+--------------+
| vesx3.virten.lab | Local VMware Disk (mpx.vmhba0:C0:T2:L0) | Proactive rebalance is needed | 1.9889 GB    |
+------------------+-----------------------------------------+-------------------------------+--------------+
[...]
> vsan.health.cluster_rebalance ~cluster
This command will trigger the immediate rebalance of vSAN cluster. It will rebalance the vSAN objects for the imbalance hosts based on the disk usage.
This process may take a moment ...
vSAN66: success
> vsan.health.health_summary ~cluster
[...]
|   vSAN Disk Balance                                  | Passed  |
[...]
```
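To get a feeling for the metrics in the "vSAN Disk Balance" table, the sketch below derives average usage, maximum usage and maximum variance from a list of per-disk usage percentages. It rests on an assumption that is not stated in the output: "Maximum Variance" is taken to be the spread between the most-used and the least-used capacity disk. The LM Balance Index is not modeled, and the input values are made up for illustration.

```python
from statistics import mean


def disk_balance_metrics(usage_pct: list[float]) -> dict[str, float]:
    """Summarize per-disk capacity usage in percent.

    Assumption: "Maximum Variance" = most-used disk minus least-used disk.
    """
    if not usage_pct:
        raise ValueError("need at least one disk")
    return {
        "average": mean(usage_pct),
        "maximum": max(usage_pct),
        "max_variance": max(usage_pct) - min(usage_pct),
    }


# Hypothetical per-disk usage values, chosen only for illustration.
metrics = disk_balance_metrics([5, 10, 10, 15, 35, 15])
print(metrics)
```

For these made-up values the function reports an average of 15, a maximum of 35 and a maximum variance of 30 percentage points, the same figures as in the example table.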

vsan.health.cluster_debug_multicast [-d] ~cluster

This command performs a multicast test to verify that all hosts receive multicast packets. In the output, each host is represented by a character (A, B, C, ...).

Please note that vSAN 6.6 no longer uses multicast, so this test is only relevant for older versions.

- -d, --duration: Duration in seconds to watch for packets (default: 60)

Example 1 – Debug Multicast:

```
> vsan.health.cluster_debug_multicast ~cluster
2017-07-25 15:00:06 +0000: Gathering information about hosts and vSAN
2017-07-25 15:00:06 +0000: Watching packets for 60 seconds
2017-07-25 15:00:06 +0000: Got observed packets from all hosts, analysing
Automated system couldn't derive any issues.
Either no problem exists or manual inspection is required.

To further help the network admin, the following is a list of packets with source and destination IPs. As all these packets are multicast, they should have been received by all hosts in the cluster. To show which hosts actually saw the packets, each host is represented by a character (A-Z). If the character is listed in front of the packet, the host received the packet. If the space is left empty, the host didn't receive the packet.

A = Host vesx1.virten.lab
B = Host vesx2.virten.lab
C = Host vesx3.virten.lab
```
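The output explains that a host letter in front of a packet means the host received it, while an empty position means it did not. The example lists no packets, so the exact packet-line format is not shown here. The sketch below therefore assumes a hypothetical layout with one marker column per host, in letter order, followed by the packet details. Only the legend format ("A = Host ...") is taken from the output above; `parse_legend` and `hosts_missing_packet` are illustrative names.

```python
import re

# Legend lines printed at the end of the command, e.g. "A = Host vesx1.virten.lab".
LEGEND_RE = re.compile(r"^([A-Z]) = Host (\S+)$", re.MULTILINE)


def parse_legend(output: str) -> dict[str, str]:
    """Map each host letter to its host name."""
    return dict(LEGEND_RE.findall(output))


def hosts_missing_packet(packet_line: str, legend: dict[str, str]) -> list[str]:
    """Return the hosts that did not receive a packet.

    Hypothetical line format: one marker column per host (A, B, C, ...)
    in front of the packet details; a letter means "received", a space
    means "not received".
    """
    letters = sorted(legend)
    markers = packet_line[: len(letters)].ljust(len(letters))
    return [legend[letter] for letter, mark in zip(letters, markers) if mark != letter]


legend = parse_legend(
    "A = Host vesx1.virten.lab\nB = Host vesx2.virten.lab\nC = Host vesx3.virten.lab"
)
print(hosts_missing_packet("A C 10.0.0.1 -> 224.1.2.3", legend))
```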

vsan.health.multicast_speed_test ~cluster

Performs a multicast speed test to ensure that there is adequate network bandwidth between all hosts in the vSAN cluster. Please note that although multicast is no longer used, the speed test still works in vSAN 6.6.

Example 1 – Perform a multicast speed test:

```
> vsan.health.multicast_speed_test ~cluster
Performing a multicast speed test. One host is selected to send multicast traffic, all other hosts will attempt to receive the packets. The test is designed such that the sender sends more than most physical networks can handle, i.e. it is expected that the physical network may drop packets which then won't be received by the receivers. Assuming a TCP speed test shows good performance, the most likely suspect for failing the multicast speed test are multicast bottlenecks in physical switches.
The key question this test tries to answer is: What bandwidth is the receiver able to get? For vSAN to work well, this number should be at least 20MB/s. Typical enterprise environments should be able to do 50MB/s or more.
Now running test ...
Overall health: Passed
+------------------+---------------+---------------------------+--------------------------------------------+
| Host             | Health Status | Received Bandwidth (MB/s) | Maximum Achievable Bandwidth Result (MB/s) |
+------------------+---------------+---------------------------+--------------------------------------------+
| vesx1.virten.lab | Passed        | 63.68                     | 125.00                                     |
| vesx3.virten.lab | Passed        | 62.58                     | 125.00                                     |
+------------------+---------------+---------------------------+--------------------------------------------+
```
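The command's own explanation gives two reference points for the received bandwidth: at least 20 MB/s for vSAN to work well, and 50 MB/s or more in typical enterprise environments. The sketch below places each host's result relative to those two numbers. The category labels are invented for illustration; they are not the verdicts the health check itself uses.

```python
# Thresholds quoted in the command's own output.
MIN_MB_S = 20.0      # "this number should be at least 20MB/s"
TYPICAL_MB_S = 50.0  # "Typical enterprise environments should be able to do 50MB/s or more"


def rate_receiver(received_mb_s: float) -> str:
    """Place a host's received bandwidth relative to the quoted guidance.

    The labels are illustrative, not the health check's own results.
    """
    if received_mb_s < MIN_MB_S:
        return "below minimum"
    if received_mb_s < TYPICAL_MB_S:
        return "meets minimum"
    return "enterprise typical"


# Received bandwidth per host from the example run.
results = {"vesx1.virten.lab": 63.68, "vesx3.virten.lab": 62.58}
for host, mb_s in results.items():
    print(host, rate_receiver(mb_s))
```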

vsan.health.hcl_update_db [-l|-u|-f] ~vc

Updates the HCL database from a local file, a URL or vmware.com. When vCenter Server has Internet connectivity, you can run the command without any options. The official site for downloading the HCL file is: http://partnerweb.vmware.com/service/vsan/all.json

- -l, --local-file: Path to local file that contains DB

- -u, --url: Path to URL that contains DB

- -f, --force: Skip any questions, and proceed

Example 1 – Update the HCL database from vmware.com without asking:

```
> vsan.health.hcl_update_db ~vc -f
Updating DB from vmware.com. Note: vCenter needs to have access to vmware.com.
Done
```
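Before updating, it can help to see how old the local copy actually is. The "vSAN HCL DB up-to-date" detail table in the health summary lists both the current time and the last update in UTC. This sketch computes the age from those two values; `hcl_db_age` is a hypothetical helper, and the sketch does not assume any particular age at which the check turns yellow.

```python
from datetime import datetime, timedelta

UTC_FORMAT = "%Y-%m-%d %H:%M:%S"  # format of the "Time in UTC" column


def hcl_db_age(current_time: str, last_updated: str) -> timedelta:
    """Age of the local HCL DB copy, from the two rows of the health check table."""
    return datetime.strptime(current_time, UTC_FORMAT) - datetime.strptime(last_updated, UTC_FORMAT)


# Values from the health summary example.
age = hcl_db_age("2017-07-25 14:13:23", "2017-04-25 16:56:42")
print(age)  # 90 days, 21:16:41
```

In the example, the check reported yellow for a copy that was roughly three months old.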

vsan.health.cluster_repair_immediately ~cluster

This command triggers an immediate repair of two categories of objects. The first category consists of objects impacted by components in the ABSENT state, caused by failed hosts or hot-unplugged drives. By default, vSAN waits 60 minutes before repairing them, because in most such cases the failed components come back. The second category consists of objects that were not repaired earlier because the cluster conditions at the time did not allow it. vSAN periodically rechecks those objects. This command instructs both types of objects to attempt a repair immediately.

Example 1 – Start immediate repair after disk failure:

```
> vsan.health.cluster_repair_immediately ~cluster
vSAN66: success
```
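For the first category of objects, the arithmetic behind the command is simple: without intervention, repair of ABSENT components starts once the default 60-minute delay has elapsed after the failure. The sketch below computes how much of that wait the command skips. The failure time is hypothetical. If your cluster does not use the default delay, pass a different `delay`. Objects in the second category are not tied to this timer.

```python
from datetime import datetime, timedelta

# Default wait before vSAN repairs objects with ABSENT components.
DEFAULT_REPAIR_DELAY = timedelta(minutes=60)


def automatic_repair_time(failure_time: datetime, delay: timedelta = DEFAULT_REPAIR_DELAY) -> datetime:
    """When vSAN would start repairing ABSENT components on its own."""
    return failure_time + delay


def minutes_until_automatic_repair(failure_time: datetime, now: datetime,
                                   delay: timedelta = DEFAULT_REPAIR_DELAY) -> float:
    """Remaining wait that cluster_repair_immediately skips (0 once the delay has passed)."""
    remaining = automatic_repair_time(failure_time, delay) - now
    return max(remaining.total_seconds() / 60, 0.0)


failed = datetime(2017, 7, 25, 15, 0)  # hypothetical disk failure time
now = datetime(2017, 7, 25, 15, 10)
print(minutes_until_automatic_repair(failed, now))  # 50.0
```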

vsan.health.cluster_attach_to_sr [-s] ~cluster

Automatically creates vSAN-related log bundles and uploads them to the support request opened with VMware Global Support Services (GSS).

- -s, --sr=: SR number

Example 1 – Create and upload log bundle:

```
> vsan.health.cluster_attach_to_sr -s 99999999 ~cluster
vSAN Support Assistant performs automated upload of support bundles, and so does not allow you to review, obfuscate or otherwise edit the contents of your support data prior to it being sent to VMware.
If your support data may contain regulated data, such as personal, health care data and/or financial data, you should instead use the more manual workflow by clicking vCenter -> Actions -> Export System Logs selecting 'Include vCenter Server' as well as all ESX hosts in the cluster.
Follow VMware KB 2072796 (http://kb.vmware.com/kb/2072796) for the manual workflow.
This process may take a moment ...
Attaching vSAN support bundle for the cluster 'vSAN66' ...
vSAN66: running |------- |
```

vsan.health.health_check_interval_status ~cluster

Displays the current health check interval. The default interval is 60 minutes.

Example 1 – Display health check interval:

```
> vsan.health.health_check_interval_status ~cluster
+---------+-----------------------+
| Cluster | Health Check Interval |
+---------+-----------------------+
| vSAN66  | 60 mins               |
+---------+-----------------------+
```

vsan.health.health_check_interval_configure [-i] ~cluster

Configures the health check interval (in minutes) for the cluster. The default is 60 minutes. Set the interval to 0 to disable periodic health checks.

- -i, --interval: Health Check Interval in minutes

Example 1 – Disable periodic health checks:

```
> vsan.health.health_check_interval_configure -i 0 ~cluster
Disabled the periodical health check for vSAN66
```

Example 2 – Re-enable health checks with a non-default interval:

```
> vsan.health.health_check_interval_configure -i 120 ~cluster
Successfully set the health check interval for vSAN66 to 120 minutes!
```
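If you script these two commands, for example by pasting generated lines into an RVC session, a pair of small helpers keeps the interval handling consistent. This is a sketch with hypothetical names. It builds the command line with the documented `-i` option, where 0 disables periodic checks, and parses a data row of the status table. Rejecting negative values is my own assumption. The status format for a disabled interval is not shown in the examples, so the parser raises an error for anything other than "N mins".

```python
import re


def interval_configure_cmd(minutes: int, target: str = "~cluster") -> str:
    """Build the RVC command line; 0 disables periodic health checks."""
    if minutes < 0:
        raise ValueError("interval must be 0 (disabled) or a positive number of minutes")
    return f"vsan.health.health_check_interval_configure -i {minutes} {target}"


def parse_interval_row(row: str) -> tuple[str, int]:
    """Parse a data row of health_check_interval_status, e.g. '| vSAN66 | 60 mins |'."""
    cluster, interval = (cell.strip() for cell in row.strip().strip("|").split("|"))
    match = re.fullmatch(r"(\d+) mins", interval)
    if match is None:
        raise ValueError(f"unexpected interval cell: {interval!r}")
    return cluster, int(match.group(1))


print(interval_configure_cmd(120))
print(parse_interval_row("| vSAN66  | 60 mins               |"))
```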

vsan.health.cluster_proxy_status ~cluster

When vCenter Server does not have direct access to the Internet, you can configure a proxy that provides Internet connectivity. This is required for health check plugins such as CEIP and HCL database updates. This command displays the current proxy configuration.

Example 1 – Display vSAN health service proxy configuration (No proxy used):

```
> vsan.health.cluster_proxy_status ~cluster/
+---------+------------+------------+------------+
| Cluster | Proxy Host | Proxy Port | Proxy User |
+---------+------------+------------+------------+
| vSAN66  |            |            |            |
+---------+------------+------------+------------+
```

vsan.health.cluster_proxy_configure [-o|-p|-u] ~cluster

Configure a proxy that allows health check plugins such as CEIP, HCL database updates and Support Assistant to connect to the Internet.

- -o, --host: Proxy host

- -p, --port: Proxy port

- -u, --user: Proxy user

Example 1 – Configure a proxy:

```
> vsan.health.cluster_proxy_configure -o proxy.virten.lab -p 8080 -u vc ~cluster
Enter proxy password (empty for no password):
Enter proxy password again:
Configure the proxy for the cluster 'vSAN66' ...
> vsan.health.cluster_proxy_status ~cluster
+---------+------------------+------------+------------+
| Cluster | Proxy Host       | Proxy Port | Proxy User |
+---------+------------------+------------+------------+
| vSAN66  | proxy.virten.lab | 8080       | vc         |
+---------+------------------+------------+------------+
```
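Proxy settings are often documented as a single URL such as `http://vc@proxy.virten.lab:8080`. The sketch below splits such a URL into the `-o`, `-p` and `-u` options listed above, using only Python's standard `urllib.parse.urlsplit`. The helper name is hypothetical. Any password embedded in the URL is deliberately ignored, because the command prompts for it interactively.

```python
from urllib.parse import urlsplit


def proxy_configure_cmd(proxy_url: str, target: str = "~cluster") -> str:
    """Translate a proxy URL into the -o/-p/-u options of cluster_proxy_configure.

    Any password in the URL is ignored on purpose: the command prompts for it.
    """
    parts = urlsplit(proxy_url)
    if not parts.hostname or parts.port is None:
        raise ValueError("proxy URL must include a host and a port")
    args = ["vsan.health.cluster_proxy_configure", "-o", parts.hostname, "-p", str(parts.port)]
    if parts.username:
        args += ["-u", parts.username]
    args.append(target)
    return " ".join(args)


print(proxy_configure_cmd("http://vc@proxy.virten.lab:8080"))
# vsan.health.cluster_proxy_configure -o proxy.virten.lab -p 8080 -u vc ~cluster
```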

vsan.health.cluster_load_test_run [-r|-t|-d|-a] ~cluster

Runs a storage performance test on the vSAN cluster. You can either run everything in a single step or run the test as three distinct steps: Prepare, Run and Cleanup.

- -r, --runname: Test name

- -t, --type: VMDK workload type

- -d, --duration-sec: Duration for running the load test in seconds

- -a, --action: Possible actions are 'prepare', 'run', 'cleanup' and 'fullrun'. (Default is fullrun)

Available testing methods:

- Low stress test

- Basic sanity test, focus on Flash cache layer

- Stress test

- Performance characterization - 100% Read, optimal RC usage

- Performance characterization - 100% Write, optimal WB usage

- Performance characterization - 100% read, optimal RC usage after warmup

- Performance characterization - 70/30 read/write mix, realistic, optimal flash cache usage

- Performance characterization - 70/30 read/write mix, high IO size, optimal flash cache usage

- Performance characterization - 100% read, Low RC hit rate / All-Flash demo

- Performance characterization - 100% Streaming reads

- Performance characterization - 100% Streaming writes

Refer to KB2147074 for detailed test specifications.

Example 1 – Perform a "Low Stress test" for 60 seconds:

```
> vsan.health.cluster_load_test_run -r stresstest -t "Low stress test" -d 60 ~cluster
This command will run the VMDK load test for the given cluster
If the action is 'fullrun' or not specified, it will do all of steps to run the test including preparing, running and cleaning up.
And it will only run the test based on the VMDK which is created by cluster_load_test_prepare if action is 'run'.
In this sitution, the VMDK cleanup step is required by calling cluster_load_test_cleanup
vSAN66: success
VMDK load test completed for the cluster vSAN66: green
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
| Host             | Workload Type   | VMDK Disk Number | Duration (sec) | IOPS | Throughput MB/s | Average Latency (ms) | Maximum Latency (ms) |
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
| vesx2.virten.lab | Low stress test | 0                | 60             | 4822 | 18.84           | 0.21                 | 14.79                |
| vesx1.virten.lab | Low stress test | 0                | 60             | 2905 | 11.35           | 0.34                 | 27.40                |
| vesx3.virten.lab | Low stress test | 0                | 60             | 2888 | 11.28           | 0.34                 | 32.50                |
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
```

Example 2 – Perform a load test in interactive mode (without -t, the command prompts for the workload type):

```
> vsan.health.cluster_load_test_run -r stresstest -d 60 ~cluster
This command will run the VMDK load test for the given cluster
If the action is 'fullrun' or not specified, it will do all of steps to run the test including preparing, running and cleaning up.
And it will only run the test based on the VMDK which is created by cluster_load_test_prepare if action is 'run'.
In this sitution, the VMDK cleanup step is required by calling cluster_load_test_cleanup
0: Low stress test
1: Basic sanity test, focus on Flash cache layer
2: Stress test
3: Performance characterization - 100% Read, optimal RC usage
4: Performance characterization - 100% Write, optimal WB usage
5: Performance characterization - 100% read, optimal RC usage after warmup
6: Performance characterization - 70/30 read/write mix, realistic, optimal flash cache usage
7: Performance characterization - 70/30 read/write mix, high IO size, optimal flash cache usage
8: Performance characterization - 100% read, Low RC hit rate / All-Flash demo
9: Performance characterization - 100% Streaming reads
10: Performance characterization - 100% Streaming writes
Choose the storage workload type [0]: 1
vSAN66: success
VMDK load test completed for the cluster vSAN66: green
[...]
```
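The IOPS and throughput columns of the result table are related by the average I/O size: throughput = IOPS × I/O size. Dividing the two gives the I/O size implied by each row of Example 1. This is a worked check under one assumption: the "Throughput MB/s" column is taken to be MiB/s. The result is an inference from the table, not a value taken from KB2147074.

```python
# (host, IOPS, throughput) rows copied from the Example 1 table.
ROWS = [
    ("vesx2.virten.lab", 4822, 18.84),
    ("vesx1.virten.lab", 2905, 11.35),
    ("vesx3.virten.lab", 2888, 11.28),
]


def implied_io_size_kib(iops: float, throughput_mib_s: float) -> float:
    """Average I/O size implied by IOPS and throughput.

    Assumption: the "Throughput MB/s" column is in MiB/s (1 MiB = 1024 KiB).
    """
    return throughput_mib_s * 1024 / iops


for host, iops, mib_s in ROWS:
    print(f"{host}: {implied_io_size_kib(iops, mib_s):.2f} KiB per I/O")
```

Every row works out to about 4 KiB per I/O. This suggests that the "Low stress test" issues small 4 KiB I/Os, which explains why its throughput figures are low compared with its IOPS.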

vsan.health.cluster_load_test_prepare [-r|-t] ~cluster

Part of the set of commands to run a storage performance test on the vSAN cluster. I recommend using the vsan.health.cluster_load_test_run command in "fullrun" mode to perform the test with a single command. If you want to run the load test in separate steps, use this command to prepare the load test.

- -r, --runname: Test name

- -t, --type: VMDK workload type

Example 1 – Prepare a "low stress test":

```
> vsan.health.cluster_load_test_prepare -r stresstest -t "Low stress test" ~cluster
Preparing VMDK test on vSAN66
vSAN66: success
Preparing VMDK load test is completed for the cluster vSAN66 with status green
+------------------+--------+-------+
| Host             | Status | Error |
+------------------+--------+-------+
| vesx2.virten.lab | Passed |       |
| vesx1.virten.lab | Passed |       |
| vesx3.virten.lab | Passed |       |
+------------------+--------+-------+
```

vsan.health.cluster_load_test_cleanup [-r] ~cluster

Part of the set of commands to run a storage performance test on the vSAN cluster. I recommend using the vsan.health.cluster_load_test_run command in "fullrun" mode to perform the test with a single command. If you want to run the load test in separate steps, use this command to clean up after the load test.

- -r, --runname: Test name

Example 1 – Perform a load test in 3 separate steps:

```
> vsan.health.cluster_load_test_prepare -r stresstest -t "Low stress test" ~cluster
Preparing VMDK test on vSAN66
vSAN66: success
Preparing VMDK load test is completed for the cluster vSAN66 with status green
+------------------+--------+-------+
| Host             | Status | Error |
+------------------+--------+-------+
| vesx2.virten.lab | Passed |       |
| vesx1.virten.lab | Passed |       |
| vesx3.virten.lab | Passed |       |
+------------------+--------+-------+
> vsan.health.cluster_load_test_run -r stresstest -d 60 -t "Low stress test" -a run ~cluster
This command will run the VMDK load test for the given cluster
If the action is 'fullrun' or not specified, it will do all of steps to run the test including preparing, running and cleaning up.
And it will only run the test based on the VMDK which is created by cluster_load_test_prepare if action is 'run'.
In this sitution, the VMDK cleanup step is required by calling cluster_load_test_cleanup
vSAN66: success
VMDK load test completed for the cluster vSAN66: green
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
| Host             | Workload Type   | VMDK Disk Number | Duration (sec) | IOPS | Throughput MB/s | Average Latency (ms) | Maximum Latency (ms) |
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
| vesx2.virten.lab | Low stress test | 0                | 60             | 4492 | 17.55           | 0.22                 | 15.18                |
| vesx1.virten.lab | Low stress test | 0                | 60             | 4338 | 16.95           | 0.23                 | 16.37                |
| vesx3.virten.lab | Low stress test | 0                | 60             | 4167 | 16.28           | 0.24                 | 20.39                |
+------------------+-----------------+------------------+----------------+------+-----------------+----------------------+----------------------+
> vsan.health.cluster_load_test_cleanup -r stresstest ~cluster
Cleaning up VMDK test on cluster vSAN66
vSAN66: success
Cleanup VMDK load test is completed for the cluster vSAN66 with status green
+------------------+--------+-------+
| Host             | Status | Error |
+------------------+--------+-------+
| vesx2.virten.lab | Passed |       |
| vesx1.virten.lab | Passed |       |
| vesx3.virten.lab | Passed |       |
+------------------+--------+-------+
```
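When you run the three steps by hand, the run name and workload type must match across all commands. The sketch below generates the prepare, run and cleanup lines from one set of parameters, using only the options shown in this section. `load_test_steps` is a hypothetical helper. The workload list mirrors the interactive menu, so a list index equals the menu number.

```python
# Workload types as listed in the interactive menu (index = menu number).
WORKLOAD_TYPES = [
    "Low stress test",
    "Basic sanity test, focus on Flash cache layer",
    "Stress test",
    "Performance characterization - 100% Read, optimal RC usage",
    "Performance characterization - 100% Write, optimal WB usage",
    "Performance characterization - 100% read, optimal RC usage after warmup",
    "Performance characterization - 70/30 read/write mix, realistic, optimal flash cache usage",
    "Performance characterization - 70/30 read/write mix, high IO size, optimal flash cache usage",
    "Performance characterization - 100% read, Low RC hit rate / All-Flash demo",
    "Performance characterization - 100% Streaming reads",
    "Performance characterization - 100% Streaming writes",
]


def load_test_steps(run_name: str, workload: str, duration_sec: int,
                    target: str = "~cluster") -> list[str]:
    """Return the prepare, run and cleanup command lines for one load test."""
    if workload not in WORKLOAD_TYPES:
        raise ValueError(f"unknown workload type: {workload!r}")
    t = f'"{workload}"'  # workload names contain spaces, so quote them
    return [
        f"vsan.health.cluster_load_test_prepare -r {run_name} -t {t} {target}",
        f"vsan.health.cluster_load_test_run -r {run_name} -d {duration_sec} -t {t} -a run {target}",
        f"vsan.health.cluster_load_test_cleanup -r {run_name} {target}",
    ]


for cmd in load_test_steps("stresstest", "Low stress test", 60):
    print(cmd)
```

For the parameters above, the output reproduces the three commands from the example.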

vsan.health.silent_health_check_configure [-a|-r|-i|-n] ~cluster

In home labs, for example, when you don't have supported hardware but still want a green health status, you can silence health checks. Silenced checks are displayed with a green checkmark and are marked as "Skipped".

Use the vsan.health.silent_health_check_status command to identify the Health Check Id of a specific check, or use interactive mode if you don't know the check IDs.

- -a, --add-checks: Add checks to silent list

- -r, --remove-checks: Remove checks from silent list

- -i, --interactive-add: Use interactive mode to add checks to the silent list

- -n, --interactive-remove: Use interactive mode to remove checks from the silent list

Example 1 – Disable HCL related health checks:

```
> vsan.health.silent_health_check_configure -a controllerdiskmode ~cluster
Successfully add check "Controller disk group mode is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerdriver ~cluster
Successfully add check "Controller driver is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerfirmware ~cluster
Successfully add check "Controller firmware is VMware certified" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controllerreleasesupport ~cluster
Successfully add check "Controller is VMware certified for ESXi release" to silent health check list for vSAN66
> vsan.health.silent_health_check_configure -a controlleronhcl ~cluster
Successfully add check "SCSI controller is VMware certified" to silent health check list for vSAN66
```
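Silencing several checks means one command per check ID, as in the example. The sketch below generates those lines from a list, for instance to silence the same checks on another lab cluster, or to undo them with `-r`. `silence_commands` is a hypothetical helper. It passes exactly one check ID per call because that is the only form shown here.

```python
# Check IDs of the HCL-related checks silenced in the example.
HCL_CHECK_IDS = [
    "controllerdiskmode",
    "controllerdriver",
    "controllerfirmware",
    "controllerreleasesupport",
    "controlleronhcl",
]


def silence_commands(check_ids: list[str], target: str = "~cluster",
                     remove: bool = False) -> list[str]:
    """One silent_health_check_configure call per check ID.

    remove=True switches from -a (add to silent list) to -r (remove from it).
    """
    flag = "-r" if remove else "-a"
    return [f"vsan.health.silent_health_check_configure {flag} {cid} {target}" for cid in check_ids]


for cmd in silence_commands(HCL_CHECK_IDS):
    print(cmd)
```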

vsan.health.silent_health_check_status ~cluster

Displays the current silent status for health checks.
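To check which checks are currently silenced without scanning the whole table, you can filter the captured output of this command, such as the table in the following example. This is a minimal sketch with a hypothetical function name. It keeps rows with three cells whose last cell is "Silent". Borders, the header row and category rows (empty ID and status cells) never match that condition.

```python
def silenced_check_ids(table: str) -> list[str]:
    """Return the Health Check Ids whose Silent Status is 'Silent'."""
    ids = []
    for line in table.splitlines():
        cells = [cell.strip() for cell in line.strip().strip("|").split("|")]
        if len(cells) == 3 and cells[2] == "Silent":
            ids.append(cells[1])
    return ids


# A few rows copied from the example output.
excerpt = """\
| Hardware compatibility                         |                          |        |
| Controller driver is VMware certified          | controllerdriver         | Silent |
| Host issues retrieving hardware info           | hclhostbadstate          | Normal |
| vSAN HCL DB Auto Update                        | autohclupdate            | Silent |"""

print(silenced_check_ids(excerpt))  # ['controllerdriver', 'autohclupdate']
```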

Example 1 – Health check list with some disabled checks:

> vsan.health.silent_health_check_status ~cluster
Silent Status of Cluster vSAN66:
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Health Check                                                               | Health Check Id                     | Silent Status |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Cloud Health                                                               |                                     |               |
| Controller utility is installed on host                                    | vendortoolpresence                  | Normal        |
| Controller with pass-through and RAID disks                                | mixedmode                           | Normal        |
| Customer experience improvement program (CEIP)                             | vsancloudhealthceipexception        | Normal        |
| Disks usage on storage controller                                          | diskusage                           | Normal        |
| Online health connectivity                                                 | vsancloudhealthconnectionexception  | Silent        |
| vSAN and VMFS datastores on a Dell H730 controller with the lsi_mr3 driver | mixedmodeh730                       | Normal        |
| vSAN configuration for LSI-3108 based controller                           | h730                                | Normal        |
| vSAN max component size                                                    | smalldiskstest                      | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Cluster                                                                    |                                     |               |
| Advanced vSAN configuration in sync                                        | advcfgsync                          | Normal        |
| Deduplication and compression configuration consistency                    | physdiskdedupconfig                 | Normal        |
| Deduplication and compression usage health                                 | physdiskdedupusage                  | Normal        |
| Disk format version                                                        | upgradelowerhosts                   | Normal        |
| ESXi vSAN Health service installation                                      | healtheaminstall                    | Normal        |
| Resync operations throttling                                               | resynclimit                         | Normal        |
| Software version compatibility                                             | upgradesoftware                     | Normal        |
| Time is synchronized across hosts and VC                                   | timedrift                           | Normal        |
| vSAN CLOMD liveness                                                        | clomdliveness                       | Normal        |
| vSAN Disk Balance                                                          | diskbalance                         | Normal        |
| vSAN Health Service up-to-date                                             | healthversion                       | Normal        |
| vSAN cluster configuration consistency                                     | consistentconfig                    | Normal        |
| vSphere cluster members match vSAN cluster members                         | clustermembership                   | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Data                                                                       |                                     |               |
| vSAN VM health                                                             | vmhealth                            | Normal        |
| vSAN object health                                                         | objecthealth                        | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Encryption                                                                 |                                     |               |
| CPU AES-NI is enabled on hosts                                             | hostcpuaesni                        | Normal        |
| vCenter and all hosts are connected to Key Management Servers              | kmsconnection                       | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Hardware compatibility                                                     |                                     |               |
| Controller disk group mode is VMware certified                             | controllerdiskmode                  | Silent        |
| Controller driver is VMware certified                                      | controllerdriver                    | Silent        |
| Controller firmware is VMware certified                                    | controllerfirmware                  | Silent        |
| Controller is VMware certified for ESXi release                            | controllerreleasesupport            | Silent        |
| Host issues retrieving hardware info                                       | hclhostbadstate                     | Normal        |
| SCSI controller is VMware certified                                        | controlleronhcl                     | Silent        |
| vSAN HCL DB Auto Update                                                    | autohclupdate                       | Silent        |
| vSAN HCL DB up-to-date                                                     | hcldbuptodate                       | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Limits                                                                     |                                     |               |
| After 1 additional host failure                                            | limit1hf                            | Normal        |
| Current cluster situation                                                  | limit0hf                            | Normal        |
| Host component limit                                                       | nodecomponentlimit                  | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Network                                                                    |                                     |               |
| Active multicast connectivity check                                        | multicastdeepdive                   | Normal        |
| All hosts have a vSAN vmknic configured                                    | vsanvmknic                          | Normal        |
| All hosts have matching multicast settings                                 | multicastsettings                   | Normal        |
| All hosts have matching subnets                                            | matchingsubnet                      | Normal        |
| Hosts disconnected from VC                                                 | hostdisconnected                    | Normal        |
| Hosts with connectivity issues                                             | hostconnectivity                    | Normal        |
| Multicast assessment based on other checks                                 | multicastsuspected                  | Normal        |
| Network latency check                                                      | hostlatencycheck                    | Normal        |
| vMotion: Basic (unicast) connectivity check                                | vmotionpingsmall                    | Silent        |
| vMotion: MTU check (ping with large packet size)                           | vmotionpinglarge                    | Normal        |
| vSAN cluster partition                                                     | clusterpartition                    | Normal        |
| vSAN: Basic (unicast) connectivity check                                   | smallping                           | Normal        |
| vSAN: MTU check (ping with large packet size)                              | largeping                           | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Performance service                                                        |                                     |               |
| All hosts contributing stats                                               | hostsmissing                        | Normal        |
| Performance data collection                                                | collection                          | Normal        |
| Performance service status                                                 | perfsvcstatus                       | Normal        |
| Stats DB object                                                            | statsdb                             | Normal        |
| Stats DB object conflicts                                                  | renameddirs                         | Normal        |
| Stats master election                                                      | masterexist                         | Normal        |
| Verbose mode                                                               | verbosemode                         | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Physical disk                                                              |                                     |               |
| Component limit health                                                     | physdiskcomplimithealth             | Normal        |
| Component metadata health                                                  | componentmetadata                   | Normal        |
| Congestion                                                                 | physdiskcongestion                  | Normal        |
| Disk capacity                                                              | physdiskcapacity                    | Normal        |
| Memory pools (heaps)                                                       | lsomheap                            | Normal        |
| Memory pools (slabs)                                                       | lsomslab                            | Normal        |
| Metadata health                                                            | physdiskmetadata                    | Normal        |
| Overall disks health                                                       | physdiskoverall                     | Normal        |
| Physical disk health retrieval issues                                      | physdiskhostissues                  | Normal        |
| Software state health                                                      | physdisksoftware                    | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| Stretched cluster                                                          |                                     |               |
| Invalid preferred fault domain on witness host                             | witnesspreferredfaultdomaininvalid  | Normal        |
| Invalid unicast agent                                                      | hostwithinvalidunicastagent         | Normal        |
| No disk claimed on witness host                                            | witnesswithnodiskmapping            | Normal        |
| Preferred fault domain unset                                               | witnesspreferredfaultdomainnotexist | Normal        |
| Site latency health                                                        | siteconnectivity                    | Normal        |
| Unexpected number of fault domains                                         | clusterwithouttwodatafaultdomains   | Normal        |
| Unicast agent configuration inconsistent                                   | clusterwithmultipleunicastagents    | Normal        |
| Unicast agent not configured                                               | hostunicastagentunset               | Normal        |
| Unsupported host version                                                   | hostwithnostretchedclustersupport   | Normal        |
| Witness host fault domain misconfigured                                    | witnessfaultdomaininvalid           | Normal        |
| Witness host not found                                                     | clusterwithoutonewitnesshost        | Normal        |
| Witness host within vCenter cluster                                        | witnessinsidevccluster              | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
| vSAN iSCSI target service                                                  |                                     |               |
| Home object                                                                | iscsihomeobjectstatustest           | Normal        |
| Network configuration                                                      | iscsiservicenetworktest             | Normal        |
| Service runtime status                                                     | iscsiservicerunningtest             | Normal        |
+----------------------------------------------------------------------------+-------------------------------------+---------------+
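If you save this output as plain text (for example, by copying it from an RVC session), you can extract the silenced checks with a short script. The Python below is a minimal sketch under that assumption. It relies only on the ASCII table layout shown above: data rows look like `| name | id | status |`, borders start with `+`, and category rows leave the last two cells empty. The function name `silenced_checks` is hypothetical. It is not part of RVC or the vSAN health plugin.

```python
from typing import List, Tuple


def silenced_checks(rvc_output: str) -> List[Tuple[str, str]]:
    """Return (health check name, health check id) pairs whose status is Silent.

    Assumes the ASCII layout printed by vsan.health.silent_health_check_status:
    data rows look like "| name | id | status |", border lines start with "+",
    and category rows (e.g. "Cloud Health") have empty id and status cells.
    """
    silenced = []
    for raw_line in rvc_output.splitlines():
        line = raw_line.strip()
        if not line.startswith("|"):
            continue  # skip borders, the command prompt and the title line
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != 3:
            continue  # not a three-column table row
        name, check_id, status = cells
        # The header ("Silent Status") and category rows ("") never equal "Silent".
        if status == "Silent":
            silenced.append((name, check_id))
    return silenced


# Usage with a few rows copied from the output above.
sample = """\
Silent Status of Cluster vSAN66:
+----------------------------+------------------------------------+---------------+
| Health Check               | Health Check Id                    | Silent Status |
+----------------------------+------------------------------------+---------------+
| Cloud Health               |                                    |               |
| Online health connectivity | vsancloudhealthconnectionexception | Silent        |
| vSAN max component size    | smalldiskstest                     | Normal        |
+----------------------------+------------------------------------+---------------+
"""

for name, check_id in silenced_checks(sample):
    print(f"{check_id}: {name}")
```

For the sample, this prints `vsancloudhealthconnectionexception: Online health connectivity`. The script matches the exact `Silent` cell, so the header row and the category rows drop out without any special handling.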